Topic Modelling with Language Transformers

Kuang Hao, Research Computing, NUS IT

Introduction

A recurring subject in text analytics is to understand a large corpus of texts through topics. During the analysis of social media posts, online reviews, search trends, open-ended survey responses, understanding the key topics will always come in handy. However, this task becomes complex when delegated to humans alone. In NLP (Natural Language Processing), topic modelling refers to the task of using unsupervised learning to extract main topics that occur in a collection of documents. It is frequently used in text mining for discovering hidden semantic structures in a text body. [1]

The language transformer is a novel architecture first introduced in the paper “Attention is all you need” in 2017. Used primarily in the field of NLP, [2] transformers have rapidly become the model choice for many NLP tasks, as it outperforms previous language models in mainstream benchmarks.

In this article, we will explore how the performance of topic modelling can be boosted with the use of transformers.

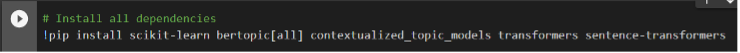

All packages needed to install:

LDA

The most commonly used algorithm in topic modelling is LDA (Latent Dirichlet Allocation). The goal of LDA is to map all documents to the topics in such a way that the keywords in each document are mostly captured by those topics. LDA is explained clearly in this article which you can explore if interested. LDA itself does not make use of transformers, but it is a simple algorithm and thus often functions as a baseline model.

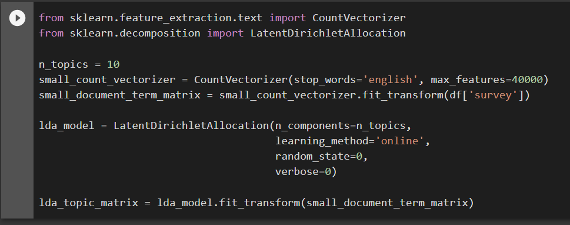

LDA is included in Python’s popular machine learning library, sklearn. In traditional LDA, we need to preprocess our text using count vectorization, which is also available in sklearn. After CountVectorizer, texts will be converted to a matrix which contains all the token counts (term frequency). One thing to note is that tf-idf vectorizer, another popular text vectorization tool, works poorly in use cases like topic modelling where the similarity between the words matters. [3] After preprocessing, the matrix can then be fed into the LDA model.

As shown in the code snippet above, we need to explicitly specify the number of topics before training the model. It’s usually hard to decide that number beforehand. A common practice is to choose a number large enough and perform dimension reduction as needed after training.

BERTopic

One common use of transformers is text vectorization (a.k.a. contextual word embedding). BERTopic is such a package that leverages one of the most popular transformer model: BERT (Bidirectional Encoder Representations from Transformers) in topic modelling. [4] After word embedding, it uses c-TF-IDF algorithm for topic modelling.

As you can see, BERTopic is wrapped up nicely for ease of usage. We can set nr_topics=’auto’ for BERTopic to automatically find the optimal number of topics. By specifying n_gram_range, BERTopic will not only work on words, but also n-grams. top_n_words is the number of key words/n-grams extracted for each topic. Another good feature is visualization, and we can visualize topics with its built-in function visualize_topics():

We can even use other word embeddings, by specifying this parameter: embedding_model. You can check out all the possible models to use from the sentence-transformers here. To check keywords for the nth topic, use model.get_topic(n).

You can visit their documentation page for more details.

Contextualized TM

Contextualized Topic Modelling provides a combined method that make use of both transformers and old-school bag-of-word model (count vectorization). [5]

In preprocessing, we need to input not only the raw data, but also the tokenized data with custom stop words removed:

It provides a built-in function create_training_set() where we need to set a transformer to use, input the above 2 versions of our dataset, and this function will do the trick. Like LDA, we need to set the number of topics in n_components before training.

After training, to check keywords for the nth topic, use ctm.get_topics()[n].

You can visit their documentation page for more details.

Topic Summary

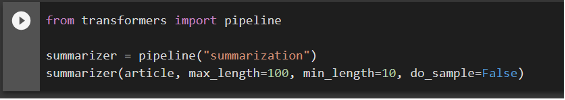

Apart from embeddings, transformers can also help in the summary part. In traditional topic modelling, key phrase extraction is usually a headache after topics are found. Now with transformers, we have a built-in summarization pipeline ready to use.

An example is to summarize the most relevant articles in a topic, and then we can use the summarization result to represent that topic. This is usually better than keyword extraction functions that are available in many TM algorithms, as the summarization output from transformers are more readable.

Conclusion

This article introduces how modern language transformers can be used in topic modelling. Building on top of traditional topic modelling algorithms, transformers can optimize text vectorization as well as the final summarization output. In practice, topic modelling is still a difficult task as finding topics in massive documents is never easy for a human. But to incorporate deep learning with topic modelling further, we would still have to wait for some research breakthroughs. Currently, human intervention is inevitable in topic modelling as some manual checks are still required in the evaluation phase.

We hope this article can help you with your language modelling journey.

On HPC

To use the above packages on HPC clusters, simply install it as a user package. The manuals for using Python on HPC clusters can be found here (CPU) and here (GPU). One thing to note is that when transformers are only used as word embeddings, GPU will not accelerate. In the above cases, the main model used for topic modelling are still LDA or c-TF-IDF, so running on CPUs is sufficient.

Contact us if you have any issues using our HPC resources, via A.S.K.

Reference

[1]. WikiPedia. (2021).“Topic Model”. [online] Available at: https://en.wikipedia.org/wiki/Topic_model

[2]. Vaswani, Ashish, et al. “Attention is all you need.”, Advances in neural information processing systems. (2017).

[3]. StackOverflow. (2017). “Necessary to apply TF-IDF to new documents in gensim LDA model?”. [online] Available at: https://stackoverflow.com/questions/44781047/necessary-to-apply-tf-idf-to-new-documents-in-gensim-lda-model/44789327#44789327

[4]. Maarten Grootendorst, . “BERTopic: Leveraging BERT and c-TF-IDF to create easily interpretable topics.”. (2020).

[5]. Federico Bianchi, et al. “Pre-training is a Hot Topic: Contextualized Document Embeddings Improve Topic Coherence”. arXiv preprint arXiv:2004.03974. (2020).