Projecting 2020 HPC Trends

by Tan Chee Chiang, Research Computing, NUS Information Technology.

Based on current market trends and technology roadmaps from market leaders, we can expect greater convergence of HPC and AI technologies, more processor (CPU) and accelerator options, further adoption of HPC Cloud and higher demand for storage capacity in 2020.

HPC and AI Convergence

At the SC2019 (Supercomputing) Conference in Denver, Colorado, in November 2019, about 15% of panel sessions, 21% of presented papers and 42% of invited talks focused on AI, Machine Learning or Data Analytics topics. These statistics suggest a high degree of common technological interest among HPC and AI practitioners.

This is good news for HPC resource and service providers like us at NUS IT, where we have to transform our capabilities and expertise to cater for new AI requirements. As the existing HPC-centric capability has to be extended to support AI applications, having more common technologies (hardware or software) that can accelerate both HPC and AI applications will shorten the transformation process. Let’s look at some of the developments that are benefiting both the HPC and AI communities.

More CPU/GPU/Accelerator Options

We can expect a new generation of CPUs with more cores (from Intel, AMD and IBM) and greater energy efficiency (from ARM) to be delivered to the market as part of HPC-AI solutions in the coming year.

For HPC-AI accelerators such as GPUs, we will have new options from AMD and Intel besides those from Nvidia.

We are also looking forward to the launch of a new chip, the Intelligence Processing Unit (IPU) from Graphcore, which is claimed to offer 100-fold better AI/Machine Learning performance than GPUs or other accelerators. The new chip is expected to be introduced early this year on Azure Cloud.

With more options and hence greater competition among suppliers, one obvious benefit to users is lower cost. However, such new options also introduce new challenges, particularly in software compatibility or the lack of it. It took many years for GPU providers to develop and port enough software to make the accelerator popular among the HPC community. As the Industry 4.0 revolution is now driving technology development at breakneck speed, any new HPC-AI technology will have to gain adoption quickly to be successful. Some of the efforts shared at SC2019 include oneAPI (software tools that allow code to be written once and run on different processing resources) from Intel, ROCm (an open-source HPC platform for GPU computing) from AMD and TensorFlow support from Graphcore. We will have to wait and see how these new technologies will impact the HPC-AI market.

More Focus on Data Storage and Preparation

With the rapid expansion of HPC-AI applications, greater data storage demand in the coming year is a given. At NUS IT, we provide a variety of storage solutions to cater for the various storage demands. We provide high-speed SSD storage to accelerate ML/DL on GPU systems, a Hadoop-based Data Repository and Analytics System (DRAS) to support Big Data analytics and a Utility Storage Service for data storage.

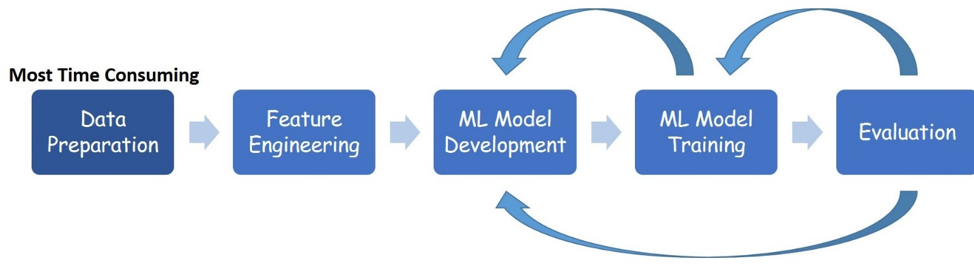

The other aspect of HPC-AI development that will gain more attention is the time-consuming data preparation effort. For a typical Machine Learning/Deep Learning (ML/DL) project, data preparation tasks such as data collection, exploration, cleaning and transformation can consume up to 80% of the total project time. Therefore, it makes sense to invest more effort in automating this process.
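To make the idea of automating data preparation concrete, here is a minimal sketch in Python that chains collection, cleaning and transformation into one repeatable function. It uses only the standard library. The data set, column names (`sample_id`, `temperature_c`, `label`) and function names are all hypothetical and chosen purely for illustration; a real project would more likely rely on dedicated data-processing tools.

```python
import csv
import io
import statistics

# Hypothetical raw data: columns and values are invented for illustration only.
RAW_CSV = """sample_id,temperature_c,label
1,21.5,ok
2,,ok
3,23.5,FAIL
3,23.5,FAIL
4,not_a_number,ok
5,22.0, Ok
"""


def load_rows(text):
    """Collection: parse CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def clean_rows(rows):
    """Cleaning: drop duplicate ids and rows with a missing or non-numeric temperature."""
    seen = set()
    cleaned = []
    for row in rows:
        if row["sample_id"] in seen:
            continue  # duplicate record
        try:
            temperature = float(row["temperature_c"])
        except ValueError:
            continue  # missing or malformed value
        seen.add(row["sample_id"])
        cleaned.append({
            "sample_id": int(row["sample_id"]),
            "temperature_c": temperature,
            "label": row["label"].strip().lower(),  # normalise label text
        })
    return cleaned


def transform_rows(rows):
    """Transformation: standardise the temperature and encode the label as 0/1."""
    temps = [r["temperature_c"] for r in rows]
    mean = statistics.mean(temps)
    stdev = statistics.pstdev(temps)
    return [
        {
            "sample_id": r["sample_id"],
            "temperature_z": (r["temperature_c"] - mean) / stdev if stdev else 0.0,
            "is_fail": 1 if r["label"] == "fail" else 0,
        }
        for r in rows
    ]


def prepare(text):
    """Run the whole pipeline so it can be repeated on every new data drop."""
    return transform_rows(clean_rows(load_rows(text)))


prepared = prepare(RAW_CSV)
for record in prepared:
    print(record)
```

Wrapping the steps in a single `prepare` function means the same rules are applied each time new data arrives, which is where the time saving from automation would come from.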

The Data Engineering team at NUS IT was set up as part of the HPC support team more than a year ago. The team provides technical support and consultation in both data preparation and Big Data/ML/DL implementation. The team also conducts regular R/Python/ML/DL training sessions. NUS staff and students can register as an HPC user at our HPC Website to receive the training notifications.

HPC-Cloud Adoption

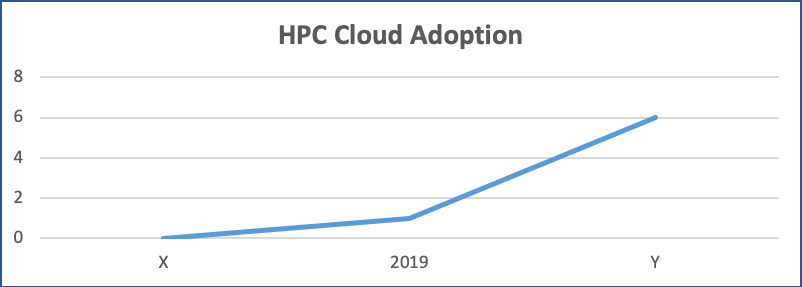

Market analysts have identified 2019 as a tipping-point year in which they observed a significant jump in HPC Cloud adoption. Even with that increase, the share of HPC workloads migrated to the Cloud remains low, at around 20%. The potential showstoppers are data transfer performance and cost.

At NUS IT, we are migrating the less data-intensive CPU workloads to the Cloud. We plan to tap into the Cloud's scalability and agility to cut user job queuing time to a minimum. For the data-intensive GPU workloads, we will continue to support them in-house until a cost-effective Cloud solution is available.

Containers for HPC?

The adoption of container technologies is expected to expand along with the increase in ML/DL workloads. Can container technology be a catalyst to drive the convergence of HPC and AI further? What benefit will it bring if both HPC and AI workloads can be containerised? Will it help in our Cloud migration? My colleague at NUS IT will be pondering over these questions in the coming months.

For any HPC-AI consultation and support, please contact us using the A.S.K. portal.