On-Going Deep Learning Research Works in NUS

By Wang Junhong, Research Computing, NUS Information Technology

Artificial Intelligence (AI) and deep learning have become some of the hottest topics, not only in industrial and real-life application development but also in research. At NUS, researchers from different departments are applying AI and deep learning methods to research projects in areas such as stereo matching, quantum many-body systems, computer vision, image classification and natural language processing. In this article, it is our pleasure to have four very active researchers share their ongoing deep learning research. All researchers are welcome to share their research work and their experience in using HPC/GPU resources.

Ph.D Student Deng Yong, Department of Electrical & Computer Engineering

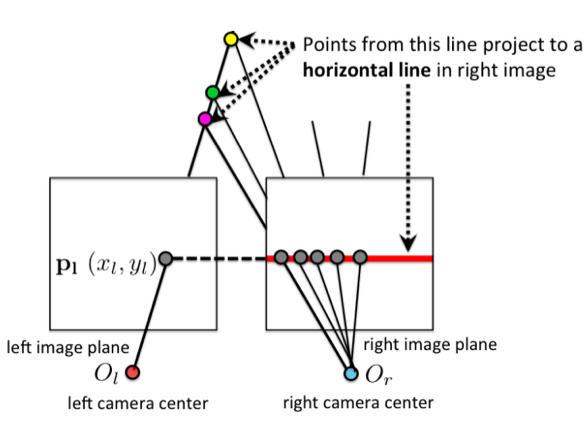

This research focuses on stereo matching, which aims to recover 3D information from stereo images. The simplest setup is two cameras placed side by side and facing forward, as in the image below.

Reference Image: http://www.cs.toronto.edu/~fidler/slides/2015/CSC420/lecture12_hres.pdf

Once the corresponding point pairs between the two images are found, the pixels in the 2D images can be projected back into 3D space (e.g. the green 3D point). Deep learning-based methods have become prevalent in stereo matching. However, most existing methods are computationally demanding. The goal of this research is to improve the accuracy of stereo matching while keeping the runtime low. Achieving this goal requires a large number of deep learning experiments.
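The back-projection step can be made concrete with a minimal sketch. It assumes a rectified pinhole stereo pair, where depth follows the standard relation Z = f·B/d (focal length f in pixels, baseline B, disparity d). The function name `backproject` and all numeric values are hypothetical and chosen for illustration only; they are not taken from the research described here.

```python
import numpy as np


def backproject(u, v, disparity, focal_px, baseline_m, cx, cy):
    """Back-project a left-image pixel (u, v) with a known disparity into 3D.

    Assumes a rectified stereo pair and a pinhole camera model. The result
    is expressed in the left camera frame, in the same unit as the baseline.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive to give a finite depth")
    z = focal_px * baseline_m / disparity  # depth from the disparity
    x = (u - cx) * z / focal_px            # horizontal offset from the optical axis
    y = (v - cy) * z / focal_px            # vertical offset from the optical axis
    return np.array([x, y, z])


# Hypothetical camera parameters and a single matched pixel.
point = backproject(u=400.0, v=300.0, disparity=20.0,
                    focal_px=800.0, baseline_m=0.5, cx=320.0, cy=240.0)
print(point)
```

A stereo matching network estimates the disparity for every pixel; a conversion like this one then turns the resulting disparity map into a 3D point cloud.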

The deep learning experiments are performed with PyTorch running on a GPU system.

Ph.D Student Remmy Augusta Menzata Zen, School of Computing

Recently, machine learning algorithms, specifically neural networks, have been successfully applied in medicine, biology, finance and engineering. These successes indicate that such algorithms are able to address hard and fundamental problems. The study of quantum many-body systems, i.e. quantum systems composed of many interacting particles, is also a challenging and impactful problem. Recent breakthroughs suggest that neural networks known as “neural-network quantum states” can indeed yield performant algorithms and tools for the study of quantum many-body systems. This was first shown by Carleo and Troyer in a seminal 2017 Science paper titled “Solving the Quantum Many-Body Problem with Artificial Neural Networks”.

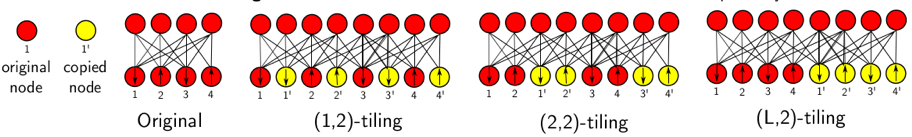

The purpose of this research is to study the effectiveness, efficiency and scalability of neural-network quantum states for solving problems in quantum many-body systems. The existing neural-network quantum state code is implemented in C++ with MPI support. The research team ported the code to the TensorFlow library to take advantage of general-purpose graphics processing units, and then tested it by searching for the quantum state with the lowest energy. The TensorFlow implementation runs 5 times faster and scales better than the existing implementation. Furthermore, the team proposed several physics-inspired transfer learning protocols to improve the scalability of neural-network quantum states. The protocols also improve the efficiency and effectiveness of the method. The team also proposed a transfer learning protocol for finding the quantum critical points that mark a quantum phase transition. The proposed protocol found the quantum critical point efficiently and effectively.

This picture illustrates the transfer learning protocols that the researchers proposed.
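To make the idea of a neural-network quantum state concrete, the sketch below evaluates the restricted Boltzmann machine amplitude used in the Carleo and Troyer paper and computes its variational energy. It is only an illustration and not the team's TensorFlow code: the transverse-field Ising chain, the system size and the random parameters are assumptions, and the energy is obtained by enumerating every spin configuration, which is feasible only for very small systems (practical implementations estimate it by Monte Carlo sampling).

```python
import itertools

import numpy as np


def rbm_amplitude(spins, a, b, W):
    """Unnormalised RBM amplitude: exp(a.s) * prod_j 2*cosh(b_j + sum_i s_i W_ij)."""
    theta = b + spins @ W
    return np.exp(a @ spins) * np.prod(2.0 * np.cosh(theta))


def ising_hamiltonian(n, h):
    """Dense H = -sum_i sz_i sz_{i+1} - h * sum_i sx_i on an open chain of n spins."""
    configs = list(itertools.product([1, -1], repeat=n))
    index = {c: k for k, c in enumerate(configs)}
    H = np.zeros((len(configs), len(configs)))
    for c in configs:
        k = index[c]
        # Diagonal part: nearest-neighbour coupling.
        H[k, k] = -sum(c[i] * c[i + 1] for i in range(n - 1))
        # Off-diagonal part: the transverse field flips one spin at a time.
        for i in range(n):
            flipped = list(c)
            flipped[i] = -flipped[i]
            H[index[tuple(flipped)], k] += -h
    return configs, H


def variational_energy(psi, H):
    """Rayleigh quotient <psi|H|psi> / <psi|psi> for a real wave function."""
    return float(psi @ H @ psi / (psi @ psi))


# Hypothetical, untrained parameters for a 4-spin chain.
rng = np.random.default_rng(0)
n_visible, n_hidden = 4, 8
a = 0.01 * rng.standard_normal(n_visible)
b = 0.01 * rng.standard_normal(n_hidden)
W = 0.01 * rng.standard_normal((n_visible, n_hidden))

configs, H = ising_hamiltonian(n_visible, h=1.0)
psi = np.array([rbm_amplitude(np.array(c, dtype=float), a, b, W) for c in configs])
energy = variational_energy(psi, H)
exact_ground = np.linalg.eigvalsh(H)[0]
print(energy, exact_ground)
```

By the variational principle, `energy` can never fall below `exact_ground`. Training adjusts `a`, `b` and `W` to push the variational energy down towards that bound.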

Ph.D Student Xu Ziwei, School of Computing

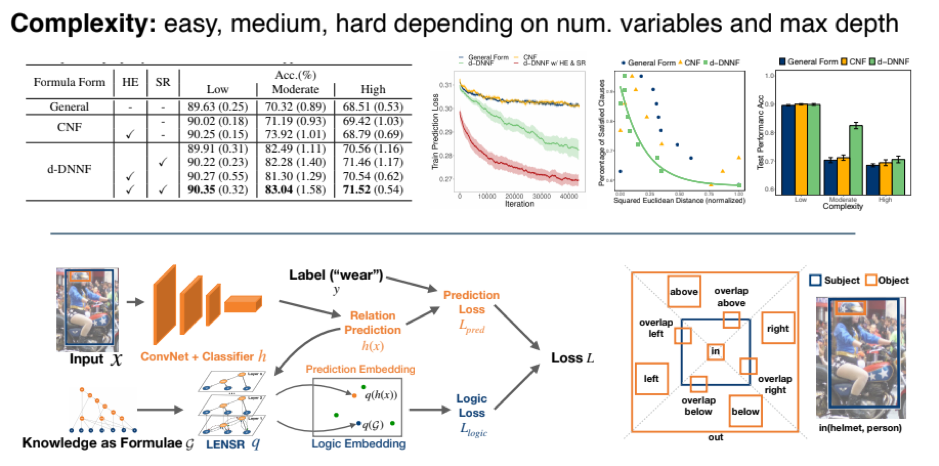

The researcher is currently working on computer vision. The objective of the research project is to incorporate external knowledge into neural networks and use it for scene understanding. Part of the training was done on NSCC’s AI system, and the work was published at NeurIPS 2019.

The research team proposed the Logic Embedding Network with Semantic Regularization (LENSR), which embeds prior symbolic knowledge to enhance deep models. The team proposed a graph embedding network that projects propositional formulae (and assignments) onto a manifold via an augmented Graph Convolutional Network (GCN). To generate semantically faithful embeddings, the authors developed techniques to recognize node heterogeneity, as well as a semantic regularization that incorporates structural constraints into the embedding. Experiments show that the proposed approach improves the performance of models trained to perform entailment checking and visual relation prediction. Interestingly, the authors observed a connection between the tractability of the propositional theory representation and the ease of embedding. Future exploration of this connection may elucidate the relationship between knowledge compilation and vector representation learning.
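As a rough illustration of how a formula graph can be turned into a vector, the sketch below applies one plain graph convolution layer (the standard symmetric-normalised propagation rule) to a tiny graph for the formula "a AND b", then averages the node vectors. This is not the LENSR implementation: it omits the paper's GCN augmentations and semantic regularization, and the graph encoding, feature choice and random weights are assumptions made for this example.

```python
import numpy as np


def gcn_layer(adjacency, features, weight):
    """One plain GCN layer: ReLU(D^-1/2 (A + I) D^-1/2 X W)."""
    a_hat = adjacency + np.eye(adjacency.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))   # inverse square-root degrees
    norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(norm @ features @ weight, 0.0)


# Hypothetical graph for the formula (a AND b).
# Node 0 is the AND operator; nodes 1 and 2 are the variables a and b.
adjacency = np.array([[0.0, 1.0, 1.0],
                      [1.0, 0.0, 0.0],
                      [1.0, 0.0, 0.0]])

# One-hot node types [operator, variable], a simple stand-in for node heterogeneity.
features = np.array([[1.0, 0.0],
                     [0.0, 1.0],
                     [0.0, 1.0]])

rng = np.random.default_rng(0)
weight = rng.standard_normal((2, 4))  # untrained weights, for illustration only

hidden = gcn_layer(adjacency, features, weight)
formula_embedding = hidden.mean(axis=0)  # mean-pool node vectors into one embedding
print(formula_embedding)
```

Because the two variable nodes have the same type and the same neighbourhood, this simple layer assigns them identical vectors.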

The picture below illustrates the experiments of the current study.

The published paper is available at https://arxiv.org/abs/1909.01161 and the poster at https://drive.google.com/file/d/13mwJ6kPWj5XRsGpX-s9Xx-4X8AnDriMG/view.

Researcher Soumyadip Ghosh, Department of Computer Science

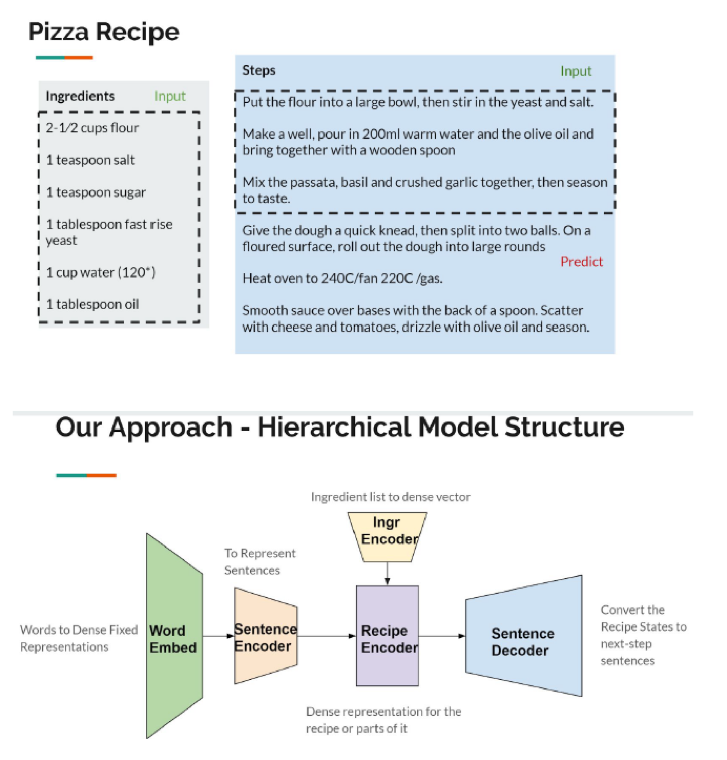

I carried out my Master’s thesis project, titled “Recipe Text Generation using additional domain specific losses”, on the HPC cluster. It dealt with text generation, a challenging task in the field of natural language processing. More specifically, given the ingredients and the previous steps in natural language, can an AI system generate the next steps? We worked with Recipe1M, a large-scale dataset of 1 million recipes. We used hierarchical recurrent neural networks, with each component encoding a different part of the recipe. During the project, I mainly used the central GPU queues, volta_login and volta_gpu, for testing and training my large-scale models, respectively. I experimented with new features on the volta_login queue, while the volta_gpu queue was used for training on the full dataset. Although the dataset itself was quite large, data transfer was quick.

Our approach for this study is illustrated by this diagram.
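The hierarchical encoding idea can be sketched in a few lines. The example below assumes one common arrangement: a word-level recurrent network summarises each recipe step, and a step-level recurrent network summarises the sequence of steps. The article does not specify which components the thesis used, so this split, the plain tanh cells, all dimensions and the token ids are hypothetical.

```python
import numpy as np


def rnn_encode(inputs, w_in, w_rec):
    """Run a simple tanh RNN over a sequence of vectors; return the final state."""
    hidden = np.zeros(w_rec.shape[0])
    for x in inputs:
        hidden = np.tanh(x @ w_in + hidden @ w_rec)
    return hidden


rng = np.random.default_rng(0)
vocab_size, embed_dim, word_hidden, step_hidden = 50, 8, 16, 12

# Untrained, randomly initialised parameters (illustration only).
embedding = 0.1 * rng.standard_normal((vocab_size, embed_dim))
word_w_in = 0.1 * rng.standard_normal((embed_dim, word_hidden))
word_w_rec = 0.1 * rng.standard_normal((word_hidden, word_hidden))
step_w_in = 0.1 * rng.standard_normal((word_hidden, step_hidden))
step_w_rec = 0.1 * rng.standard_normal((step_hidden, step_hidden))

# A hypothetical recipe: each step is a list of token ids.
recipe_steps = [[3, 17, 8], [21, 4], [9, 9, 30, 2]]

# Lower level: encode the words of each step into one vector per step.
step_vectors = [
    rnn_encode(embedding[np.array(tokens)], word_w_in, word_w_rec)
    for tokens in recipe_steps
]

# Upper level: encode the sequence of step vectors into a recipe context.
recipe_context = rnn_encode(step_vectors, step_w_in, step_w_rec)
print(recipe_context.shape)
```

In a generation setting, a decoder would condition on a context vector like `recipe_context` to produce the next step. That decoder is omitted here.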