HPC-AI & The New Normal: Self-swab ART Image Recognition System

Rikky Purbojati, Research Computing, NUS Information Technology

Much has been said about the convergence of HPC and AI in recent years. However, the emergence of the Covid19 pandemic has arguably kicked that convergence, and its translational application, into full gear. HPC-AI technology has been involved in every step of humanity’s effort to answer the most critical global challenge yet, climate change notwithstanding.

While the long-term impact and trajectory remain to be seen, it is undeniable that advances in computing technologies (and other scientific fields) have allowed the response to be swift and laser-focused. The virus was characterised within months. Vaccines, helped immensely by earlier SARS vaccine research, were available within a year. These responses would not have been as timely had the pandemic occurred a decade earlier.

To better appreciate the finer points, we can break down HPC-AI’s roles into four phases: detection, characterisation, response, and post-pandemic recovery.

Detection: Days before the WHO notified the public of a flu-like outbreak in China, a commercial AI-based health monitoring platform had already alerted its customers to a rise in unknown pneumonia cases and a potential outbreak there. It did so by scouring news reports, official government announcements, and other digital sources for clusters of unusual health cases. Given the number of documents that must be inspected daily, an AI system removes the impracticality of having people read them one by one.

Characterisation: Once the virus had been isolated, an international effort got under way to sequence and reconstruct its genome. Knowing the genome sequence allowed scientists to understand the virus’s potential origin, transmission profile, and health impact, and eventually to develop countermeasures against it. Genome sequencing and assembly, phylogenetic tree construction, and protein annotation are all essential analyses greatly accelerated by HPC-AI infrastructure.

Response: On the back of the tremendous resources devoted to it, the international response culminated in vaccines becoming available for general use. Top supercomputing facilities and cloud providers dedicated significant computing time to help researchers model, simulate, and evaluate how potential vaccines and drugs interact with the virus. Today, we have multiple vaccine options with different mechanisms and modes of delivery that are proven effective in reducing the impact of the virus and preventing the worst outcomes. However, as we all know, the story does not end here. New mutations and strains have been observed, and some are more resistant to the available vaccines. To manage the spread of these new strains, HPC-AI resources are essential for tracking emergent strains and devising better countermeasures against them.

Post-pandemic recovery: With no clear end in sight, some countries are planning to transition towards endemicity. Wide variation in public health policies, different levels of vaccine availability and uptake between countries, and emerging new strains make it unlikely that the virus will simply disappear. New policies and measures aim to minimise exposure and risk of infection while allowing everyday activities to continue as close to normal as possible: the new normal. In this space, HPC-AI could help manage the endemic phase through contact tracing, identifying and countering misinformation, virtual diagnostic assistants, and other safety measures that guide communities towards recovery. Closer to home in Singapore, several ad-hoc and permanent safety measures have been enacted to control the spread of the disease at the national or local level. Furthermore, some organisations with relevant expertise and requirements, such as NUS, have developed advanced solutions that support the enforcement of these policies and ensure compliance from their members.

In this edition, I would like to share how Research Computing’s AI team has developed an HPC-AI solution to support NUS’s safe management measures on campus, and how the implementation model can be adopted on a broader scale.

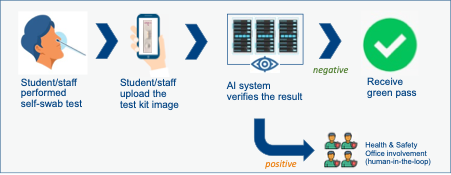

As most NUS community members know, uNivUS is the official NUS mobile application through which students and staff can access NUS-related information and resources on their smartphones. The application has become a key enabling technology during the pandemic, helping to keep students and staff safe and to comply with prevailing Covid19 restrictions. Recently, as physical restrictions have relaxed, NUS has started to require its students and staff to perform a self-swab antigen rapid test (ART) before visiting campus to minimise the risk of community infection. Students and staff perform the test at their residence and upload an image of the test result using the uNivUS application. Only after they have uploaded the image and declared a negative result are they allowed to visit campus. In the background, the uNivUS application uses an image recognition module to verify the ART result automatically. If the module detects a positive result, it triggers an alert to a human-in-the-loop for secondary verification and follow-up action. This design enforces compliance and minimises the possibility of policy circumvention.

Figure 1 Self-swab ART reporting workflow
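The gating logic of this workflow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the uNivUS implementation: the `Verdict`, `Declaration`, and `ReviewQueue` types and the `campus_access_allowed` function are hypothetical names, and both withholding access while a flagged case awaits human review and treating unreadable images like positives are assumptions.

```python
from dataclasses import dataclass, field
from enum import Enum


class Verdict(Enum):
    """Possible readings from the image recognition module (hypothetical)."""
    NEGATIVE = "negative"
    POSITIVE = "positive"
    UNREADABLE = "unreadable"  # assumption: the model may fail to read an image


@dataclass
class Declaration:
    user_id: str
    declared_negative: bool  # what the user declared in the app
    model_verdict: Verdict   # what the recognition module read from the image


@dataclass
class ReviewQueue:
    """Hypothetical queue of cases waiting for a human reviewer."""
    pending: list = field(default_factory=list)

    def alert(self, decl: Declaration, reason: str) -> None:
        self.pending.append((decl.user_id, reason))


def campus_access_allowed(decl: Declaration, queue: ReviewQueue) -> bool:
    # Entry is only considered once the user has declared a negative result.
    if not decl.declared_negative:
        return False
    # Anything other than a clear negative read escalates to a human, who
    # makes the final call; access is withheld until then (assumption).
    if decl.model_verdict is not Verdict.NEGATIVE:
        queue.alert(decl, f"model read {decl.model_verdict.value}")
        return False
    return True
```

The automated check only ever narrows access; the decision to override a flagged result stays with the human reviewer, which matches the human-in-the-loop role described above.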

Research Computing’s AI team implemented the image recognition module, leveraging its AI/ML expertise to develop the algorithm and its HPC-AI infrastructure to run the code. From the algorithm’s point of view, accuracy is the cornerstone of a successful AI/ML system. A real-world machine learning system will never achieve 100% accuracy, although certain assumptions can be made when building the model. Beyond avoiding over-fitting, so that the model still handles images outside its training data, we must decide on an appropriate trade-off between precision and recall. In this implementation, the system is designed to favour false positives over false negatives, considering the potential health impact. A false positive only requires a human to override the result manually, with no impact on public health, whereas a false negative could have dire consequences: it would allow a potentially infected person to visit campus and spread the virus within their community (ART results are not 100% accurate either and need to be confirmed with a PCR test). This scenario shows why a critical AI/ML system should always be augmented with a human-in-the-loop to guard against conditions the system cannot handle accurately.
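To make the precision/recall trade-off concrete, the sketch below picks a decision threshold on a labelled validation set so that recall on positive images stays at or above a target, accepting extra false positives in exchange. This is a generic technique assumed for illustration; the source does not describe how the team tuned its model, and `pick_threshold`, `precision_recall`, and the toy scores are hypothetical.

```python
import math


def precision_recall(scores, labels, threshold):
    """Precision and recall when images with score >= threshold are flagged positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


def pick_threshold(scores, labels, min_recall=0.99):
    """Highest threshold whose recall on known positives is at least min_recall."""
    positives = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    if not positives:
        raise ValueError("need at least one positive example")
    # Flagging the top-k positive scores requires threshold <= positives[k - 1];
    # the highest such threshold keeps recall >= k / len(positives) >= min_recall.
    k = max(1, math.ceil(min_recall * len(positives)))
    return positives[k - 1]


# Toy validation data (illustrative only): model score and true label per image.
scores = [0.95, 0.80, 0.35, 0.40, 0.20, 0.10]
labels = [1, 1, 1, 0, 0, 0]
```

On the toy data, demanding full recall lowers the threshold to 0.35, which also flags one negative image (precision 0.75, recall 1.0). Relaxing the target to 0.6 raises the threshold to 0.80, giving perfect precision but missing one positive. Favouring false positives means choosing the first setting and letting a human reviewer clear the extra alert.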

At the same time, response latency is essential to a seamless end-user experience. In an age of instant gratification, a perceived delay would lower user satisfaction and could make users less likely to comply. To keep response latency low, the code needs to both scale up and scale out. Scaling up is necessary because the machine learning techniques used in the code can be compute-intensive; using more threads or GPU accelerators underpins the system’s ability to process an image and return the result within the desired time window. Scaling out spreads the workload across multiple servers via a scheduler or load balancer, allowing the system to handle a large number of concurrent requests. This scalability requirement is a natural match for HPC-AI infrastructure.
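The effect of scaling out can be illustrated with a small queueing simulation. The sketch assumes identical servers and a dispatcher that sends each request to whichever server becomes free first; `dispatch` and the request timings are hypothetical and are not measurements from the system. Scaling up would appear here as a shorter `service_time` per request.

```python
import heapq


def dispatch(requests, servers):
    """Assign each request to the server that becomes free earliest.

    requests: list of (arrival_time, service_time) pairs, sorted by arrival.
    servers:  number of identical inference servers.
    Returns each request's latency (finish time minus arrival time).
    """
    # Min-heap of (time the server becomes free, server id).
    free_at = [(0.0, sid) for sid in range(servers)]
    heapq.heapify(free_at)
    latencies = []
    for arrival, service in requests:
        t_free, sid = heapq.heappop(free_at)
        start = max(arrival, t_free)  # wait if the earliest server is still busy
        finish = start + service
        latencies.append(finish - arrival)
        heapq.heappush(free_at, (finish, sid))
    return latencies
```

With four simultaneous requests of 2 s each, one server gives latencies of 2, 4, 6 and 8 s, two servers give 2, 2, 4 and 4 s, and four servers answer every request in 2 s.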

Figure 2 High-level architecture of Self-swab ART image recognition module

The high-level architecture of the Self-swab ART image recognition system is shown in Figure 2. At the frontend, the uNivUS mobile application opens the smartphone’s camera to take a picture of the ART result and uploads the image to the backend application. The backend application then runs a sequence of business logic and makes an API call to the image recognition system hosted on the HPC-AI infrastructure. The result is returned to the backend, which records it along with the relevant metadata attached to the image. Finally, the processed result is sent back to the uNivUS mobile application.
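The backend’s part in this flow can be sketched as a single handler. The sketch assumes the recognition service is reached through an injected `recognise` callable (in a deployment this would be an HTTP call to the HPC-AI cluster), that records are keyed by a SHA-256 digest of the image, and that empty uploads are rejected; `handle_upload` and these choices are hypothetical.

```python
import hashlib
from datetime import datetime, timezone
from typing import Callable, Dict


def handle_upload(user_id: str, image: bytes,
                  recognise: Callable[[bytes], str],
                  records: Dict[str, dict]) -> dict:
    """Hypothetical backend handler: validate, call recognition, record, reply."""
    # Business logic before the API call (assumed): reject empty uploads.
    if not image:
        return {"status": "rejected", "reason": "empty image"}
    # Call the image recognition service; injected so it can be an HTTP
    # client in production or a stub in tests.
    verdict = recognise(image)
    # Record the result together with metadata tied to this image.
    image_id = hashlib.sha256(image).hexdigest()
    records[image_id] = {
        "user_id": user_id,
        "verdict": verdict,
        "received_at": datetime.now(timezone.utc).isoformat(),
    }
    # The processed result is what goes back to the mobile application.
    return {"status": "ok", "verdict": verdict, "image_id": image_id}
```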

To delve into the design decision a little: the backend application consists of web application servers and a database, typically hosted in an enterprise IT environment. Given the relatively limited resources of a web application server, it is impractical to embed the image recognition code inside the backend code. The module was therefore designed and developed as a microservice to provide the required scalability.
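A minimal microservice of this kind can be sketched with Python’s standard `http.server` module. The endpoint path `/v1/recognise`, the JSON response shape, and the stub `classify` function are hypothetical placeholders; a production deployment would run the real model behind a proper application server and a load balancer.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def classify(image: bytes) -> str:
    """Stub standing in for the real model (hypothetical)."""
    return "unreadable" if not image else "negative"


class RecognitionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/v1/recognise":
            self.send_error(404)
            return
        # The backend posts raw image bytes; read exactly Content-Length bytes.
        length = int(self.headers.get("Content-Length", 0))
        image = self.rfile.read(length)
        body = json.dumps({"result": classify(image)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging in this example


def make_server(host: str = "127.0.0.1", port: int = 8080) -> ThreadingHTTPServer:
    # One thread per connection, so concurrent requests do not block each other.
    return ThreadingHTTPServer((host, port), RecognitionHandler)
```

Calling `make_server().serve_forever()` starts the service on port 8080; the backend would then POST raw image bytes to `/v1/recognise` and read the `result` field from the JSON reply.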

In an ideal implementation, a subset of HPC-AI resources is dedicated to the image recognition workload. Each of these servers is equipped with a high CPU core count and GPU accelerators. The servers sit behind an API load balancer, which acts as the interface between the image recognition module and the backend application. When a request arrives, the load balancer assigns the workload to a free server and passes the result back to the backend application, a typical HPC job submission pattern. However, another technical consideration comes into play: as Amdahl’s law states, the achievable speed-up is limited by the serial part of the program. A proper partitioning strategy is therefore required to balance per-request speed-up against total workload capacity. Allocating too few resources to a request would cause unacceptable response latency, while allocating too many would yield diminishing speed-up and reduce the number of concurrent workloads the system can handle. Even with GPU accelerators that promise blazing-fast processing, one needs to partition the cards properly to achieve the targeted response latency. This is even trickier because a GPU can be split into far fewer partitions than a CPU.
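The partitioning trade-off can be quantified with Amdahl’s law, S(n) = 1 / ((1 - p) + p/n), where p is the parallelisable fraction of the work and n the number of workers. The sketch assumes a node with `total_cores` identical cores split into `k` equal partitions, one request per partition; the parallel fraction of 0.9 and the single-core time of 8 s are illustrative values, not measurements of the ART model.

```python
def amdahl_speedup(p: float, n: float) -> float:
    """Amdahl's law: speed-up on n workers when a fraction p of the work is parallel."""
    return 1.0 / ((1.0 - p) + p / n)


def partition_tradeoff(t_serial: float, p: float, total_cores: int, k: int):
    """Per-request latency and node throughput when a node is split into k partitions.

    t_serial: time to process one image on a single core (seconds).
    Returns (latency_seconds, requests_per_second).
    """
    cores_per_partition = total_cores / k
    latency = t_serial / amdahl_speedup(p, cores_per_partition)
    throughput = k / latency  # k requests in flight, each taking `latency`
    return latency, throughput


if __name__ == "__main__":
    for k in (1, 4, 32):
        latency, throughput = partition_tradeoff(8.0, 0.9, 32, k)
        print(f"k={k:2d}: latency {latency:.3f} s, throughput {throughput:.3f} req/s")
```

With these numbers, giving one request all 32 cores cuts latency from 8 s to about 1.03 s (only about a 7.8x speed-up), while 32 single-core partitions keep latency at 8 s but serve 4 requests per second instead of about 0.98. Four 8-core partitions sit in between at 1.7 s and about 2.35 requests per second. On a GPU the choice of k is far more constrained; NVIDIA’s Multi-Instance GPU feature, for example, splits an A100 into at most seven instances.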

In the context of technology development, this system’s implementation is a real-life example of convergence between HPC, AI, and enterprise IT. In the context of public health, the system shows a practical application of image recognition to support the safe management measures of a local institution.

As is inevitable with any AI/ML system, there will always be some false positives and false negatives. Environmental factors such as lighting, camera quality, positioning, and camera shake, together with inherent algorithmic bias, affect the system’s overall accuracy. Other well-established image recognition systems, such as passport or thumbprint scanners, have the advantage of a controlled environment in which the device’s lighting and positioning are specifically designed to maximise accuracy. QR reader software can easily detect a code because the pattern is simple and fairly robust to lighting, consisting only of black and white. This is not the case here. Pictures are generally taken in uncontrolled environments with personal devices and multiple models of ART kits, introducing additional variables and noise that the system must handle. Some work has gone into nudging users to take good pictures; other work has made the algorithm more resilient to noise. While it is not yet perfect, the system helps relieve the human burden of checking results manually. This is relevant for organisations where many people attend or gather in relatively enclosed spaces, such as universities, schools, and factories. As we envision it, an advanced version of this system, alongside other management measures, could become part of the technology toolbox that supports a safer NUS, and even find broader application in Singapore.
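One common way to nudge users towards a usable photo is to check brightness and sharpness before upload, using the variance of a Laplacian filter as a blur score. The sketch below illustrates that heuristic on a grayscale image given as a list of pixel rows; it is an assumed technique rather than the team’s method, and `quality_feedback`, its thresholds, and the hint messages are hypothetical and would need tuning on real ART photos.

```python
from statistics import mean, pvariance


def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over a 2-D grayscale image.

    Sharp edges produce large Laplacian responses; a blurry (e.g. shaky)
    photo produces small ones, so a low variance suggests retaking the picture.
    """
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("image must be at least 3x3 pixels")
    responses = [
        gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
        - 4 * gray[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return pvariance(responses)


def quality_feedback(gray, min_sharpness=100.0, brightness_range=(60, 200)):
    """Return hints for the user; an empty list means the photo looks usable.

    The thresholds are hypothetical placeholders.
    """
    hints = []
    brightness = mean(v for row in gray for v in row)
    low, high = brightness_range
    if brightness < low:
        hints.append("too dark: move to better lighting")
    elif brightness > high:
        hints.append("too bright: avoid glare on the test window")
    if laplacian_variance(gray) < min_sharpness:
        hints.append("blurry: hold the phone steady")
    return hints
```

A check like this runs cheaply on the phone before upload, so obviously unusable photos can be retaken instead of reaching the recognition service as noise.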

If you are interested in knowing more or discussing this, you can write to dataengineering@nus.edu.sg or visit the HPC website for more information.