AR Tags and their Applications in Computer Vision Tasks

Ku Wee Kiat, Research Computing, NUS IT.

Introduction

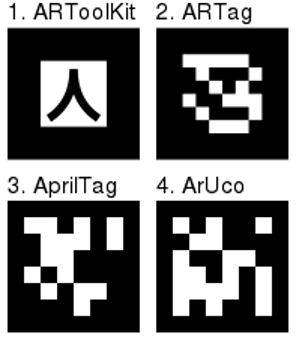

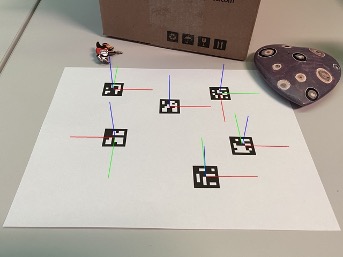

Augmented Reality Tags or AR Tags in short are commonly used for augmented reality applications. Commonly represented by a black and white square image with some patterns within a black border (some examples shown below):

There are many types of AR tags, generated by different algorithms, and each has its own pros and cons. Some are less computationally intensive to generate and detect than others, while some are harder to detect at a distance. Some are not limited to black-and-white square patterns, which allows some creativity in designing the look of each tag.

AR tags can be used to make virtual objects, games, and animations appear within the real world. They enable video tracking that calculates a camera’s position and orientation relative to physical markers in real time. For augmented reality applications, once the camera’s position is known, a virtual camera can be placed at the same point, rendering the virtual object at the location of the AR tag.

Here is an example: https://www.youtube.com/watch?v=iIhN3c7RjCI

Our focus today is not on the augmented reality applications of AR tags; instead, we will focus on how AR tags are used in computer vision tasks.

AR Tags in Computer Vision Tasks

ArUco markers [1], as implemented in the OpenCV library, provide you with:

- The position of its four corners in the image (in their original order).

- The id of the marker.

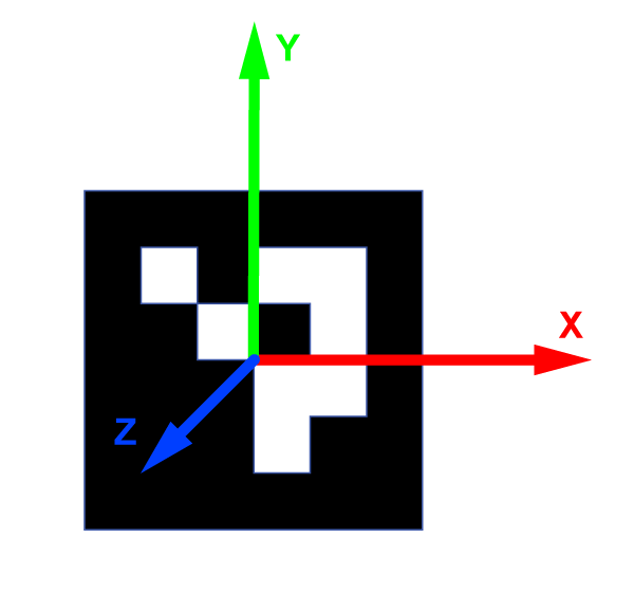

The small red square indicates the marker’s top left corner.
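Because the corners come back in a fixed order, the order alone tells you how the marker is rotated within the image. Below is a minimal sketch in plain Python, assuming the ordering used by OpenCV's ArUco module (top-left, top-right, bottom-right, bottom-left, i.e. clockwise) and pixel coordinates with y pointing down; `marker_rotation_deg` is a hypothetical helper, not an OpenCV function.

```python
import math


def marker_rotation_deg(corners):
    """In-plane rotation of a marker, in degrees, from its ordered corners.

    corners: four (x, y) pixel coordinates, assumed to be ordered
    top-left, top-right, bottom-right, bottom-left. Image y grows
    downwards, so a positive angle means the marker appears rotated
    clockwise on screen.
    """
    (x_tl, y_tl), (x_tr, y_tr) = corners[0], corners[1]
    # The marker's top edge runs from its top-left to its top-right corner;
    # the angle of that edge relative to the image x-axis is the rotation.
    return math.degrees(math.atan2(y_tr - y_tl, x_tr - x_tl))


upright = [(10, 10), (20, 10), (20, 20), (10, 20)]
turned = [(20, 10), (20, 20), (10, 20), (10, 10)]  # top-left corner now at the top right
print(marker_rotation_deg(upright))  # 0.0
print(marker_rotation_deg(turned))   # 90.0
```

The same idea works for any rotation, since `atan2` handles all four quadrants.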

If you calibrate your camera, you’ll also be able to obtain the pose of each marker, that is, its position and orientation along the X, Y and Z axes relative to the camera.
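Concretely, the pose is a rotation R and a translation t that map points from the marker's own coordinate frame into the camera frame. Under the standard pinhole camera model, with the intrinsic matrix K obtained from calibration and a square marker of side length s lying in its own z = 0 plane, each detected corner (u_i, v_i) relates to the pose as shown below (λ_i is the corner's depth scale, and lens distortion is ignored). Centring the marker frame on the marker with its y-axis pointing up is a convention assumed here. Recovering R and t from the four corner correspondences is a Perspective-n-Point problem, and OpenCV's `solvePnP` is one function that solves it.

```latex
\lambda_i \begin{bmatrix} u_i \\ v_i \\ 1 \end{bmatrix}
= K \left[ R \mid \mathbf{t} \right]
\begin{bmatrix} X_i \\ Y_i \\ 0 \\ 1 \end{bmatrix},
\qquad
K = \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix},

(X_i, Y_i)_{i=1}^{4} =
\left(-\tfrac{s}{2}, \tfrac{s}{2}\right),\;
\left(\tfrac{s}{2}, \tfrac{s}{2}\right),\;
\left(\tfrac{s}{2}, -\tfrac{s}{2}\right),\;
\left(-\tfrac{s}{2}, -\tfrac{s}{2}\right)
```

The four corners are listed in the same top-left, top-right, bottom-right, bottom-left order as the detector output, so each (u_i, v_i) pairs with the matching (X_i, Y_i).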

The information provided by the markers makes ArUco markers useful for tasks such as object tracking, pose estimation and related applications.

If you want to try it yourself, check out the following tutorials:

- Tutorial for generating ArUco markers in OpenCV and Python.

- Tutorial for detecting ArUco markers in OpenCV and Python.

Simple Task 1: Measuring the Distance Between 2 ArUco Markers

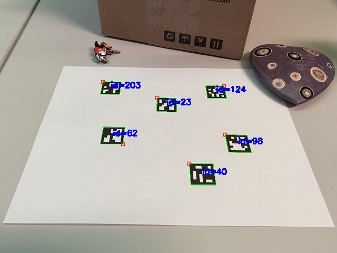

Given two ArUco markers, and assuming they lie on the same object plane (or on planes close enough that the error is acceptable), we can easily calculate the distance between them.

We can measure the physical length or height of the printed ArUco marker. With this information, when a marker is detected, we can measure its length or height in pixels and convert pixels to centimetres. For example, if the physical height is 7 cm and the pixel height of the detected tag is 70 px, each pixel on the same object plane corresponds to approximately 0.1 cm. Note that the measured pixel height of the tag can vary due to image noise, lens distortion or perspective.
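A minimal sketch of the pixel-to-centimetre conversion in plain Python, assuming the four corners are ordered around the marker as the detector returns them. Averaging all four side lengths, rather than using the height alone, is one simple way to dampen the measurement noise mentioned above; `cm_per_pixel` is a hypothetical helper.

```python
import math


def cm_per_pixel(corners, marker_size_cm):
    """Scale factor (cm per pixel) on the marker's plane.

    corners: four (x, y) pixel coordinates in order around the marker.
    marker_size_cm: measured side length of the printed marker.
    """
    # Length of each side: corner i to corner i + 1, wrapping back to corner 0.
    sides = [math.dist(corners[i], corners[(i + 1) % 4]) for i in range(4)]
    mean_side_px = sum(sides) / len(sides)
    return marker_size_cm / mean_side_px


# The example from the text: a 7 cm marker that appears 70 px tall.
corners = [(100, 100), (170, 100), (170, 170), (100, 170)]
print(cm_per_pixel(corners, 7.0))  # 0.1
```

`math.dist` requires Python 3.8 or later.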

We can then calculate the pixel distance between the two markers using the coordinates of the centre of each marker.

For example, if the pixel distance between the two markers is 700 px, we can approximate the physical distance as 700 × 0.1 cm = 70 cm.
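Putting the steps together, here is a sketch that takes each marker's centre as the mean of its four corners and multiplies the centre-to-centre pixel distance by a cm-per-pixel factor. The function names and the hard-coded 0.1 cm/px scale are illustrative assumptions; in practice the scale would be derived from a detected marker of known size, as described above.

```python
import math


def marker_centre(corners):
    """Centre of a marker as the mean of its four (x, y) corner coordinates."""
    xs, ys = zip(*corners)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def marker_distance_cm(corners_a, corners_b, cm_per_px):
    """Approximate physical distance between two markers on the same plane."""
    pixel_distance = math.dist(marker_centre(corners_a), marker_centre(corners_b))
    return pixel_distance * cm_per_px


# Two 70 px markers whose centres are 700 px apart, with 0.1 cm per pixel.
marker_a = [(100, 100), (170, 100), (170, 170), (100, 170)]
marker_b = [(800, 100), (870, 100), (870, 170), (800, 170)]
print(marker_distance_cm(marker_a, marker_b, 0.1))
```

This reproduces the worked example: a 700 px separation at 0.1 cm per pixel gives approximately 70 cm.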

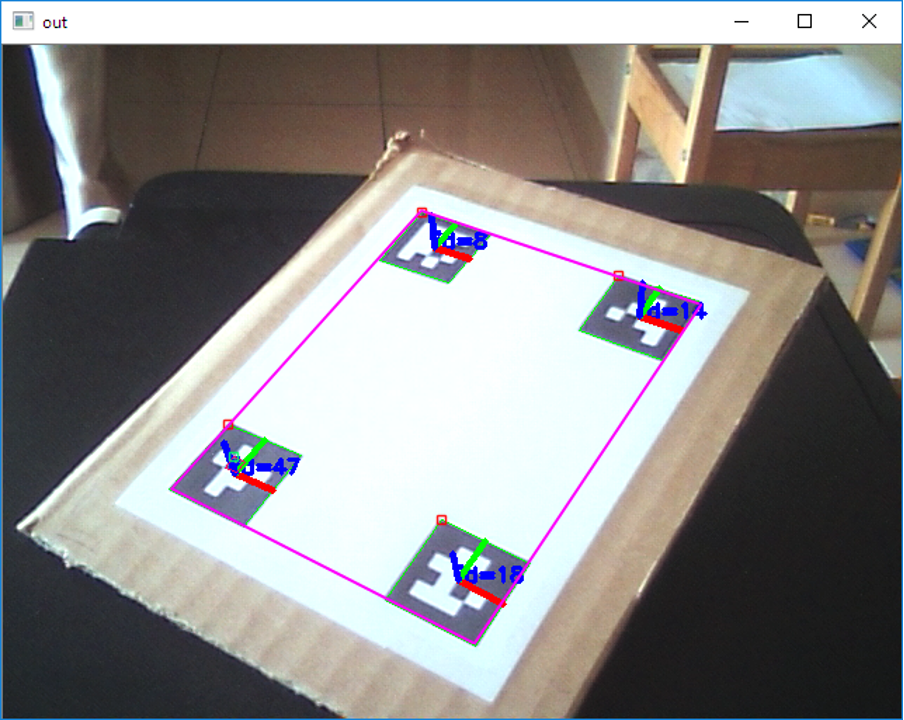

Simple Task 2: Zoning

With four ArUco markers, we can define a zone, which can serve as a safety zone or a forbidden zone depending on the task. Coupled with an object detector or simple motion detection, we can check whether a detected object or motion falls within the zone and, if so, trigger an alert.
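One way to implement the zone check, sketched in plain Python: treat the four marker centres as the vertices of a quadrilateral and use the even-odd (ray casting) rule to test whether a point, such as the centre of a detected object's bounding box, lies inside it. This assumes the centres are supplied in order around the zone (for example clockwise); `point_in_zone` is a hypothetical helper.

```python
def point_in_zone(point, zone):
    """Return True if point (x, y) lies inside the polygon zone.

    zone: list of (x, y) vertices in order around the boundary, e.g. the
    centres of four ArUco markers. Uses the even-odd ray casting rule:
    cast a horizontal ray to the right and count edge crossings.
    """
    x, y = point
    inside = False
    n = len(zone)
    for i in range(n):
        x1, y1 = zone[i]
        x2, y2 = zone[(i + 1) % n]
        # Only edges that straddle the ray's y value can be crossed
        # (this also rules out horizontal edges, so no division by zero).
        if (y1 > y) != (y2 > y):
            # x coordinate where the edge meets the horizontal line through y.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


zone = [(100, 100), (500, 120), (520, 400), (80, 380)]  # four marker centres
print(point_in_zone((300, 250), zone))  # True
print(point_in_zone((600, 250), zone))  # False
```

An alert would then be raised whenever this returns True for a detection. Points lying exactly on the zone boundary may be classified either way under this rule.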

Other Tasks

1. ArUco markers pose estimation in UAV landing aid system [2]

2. Indoor navigation using vision-based localization and augmented reality [3]

3. An Autonomous Landing and Charging System for Drones [4]

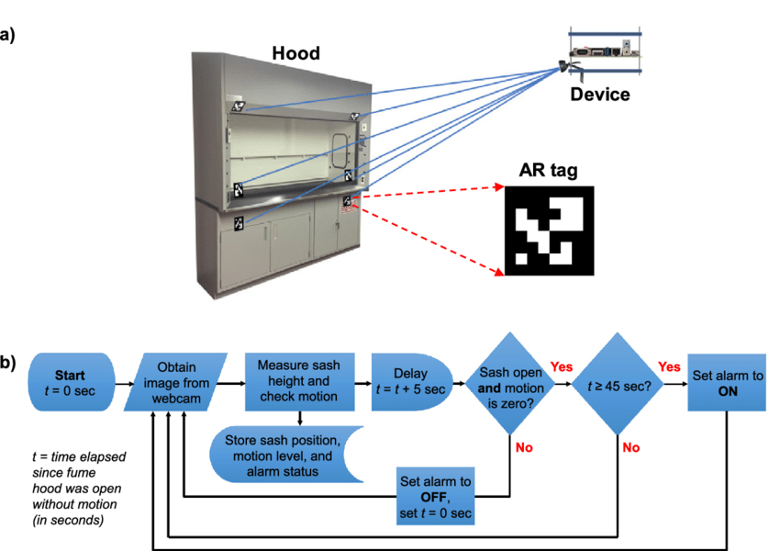

4. Active fume hood sash height monitoring with audible feedback [5]

References

[1] “ArUco: a minimal library for Augmented Reality applications based on OpenCV”, AVA, Universidad de Córdoba. Available: https://www.uco.es/investiga/grupos/ava/node/26 . [Accessed Apr. 13, 2021]

[2] A. Marut, K. Wojtowicz, K. Falkowski, “ArUco markers pose estimation in UAV landing aid system”, 2019 IEEE 5th International Workshop on Metrology for AeroSpace (MetroAeroSpace), pp. 261-266, 2019

[3] Tim Kulich, “Indoor navigation using vision-based localization and augmented reality”, Uppsala Universitet, 2019

[4] Ziwen Jiang, “An Autonomous Landing and Charging System for Drones”, MIT, 2018

[5] Laura L. Becerra, Juan A. Ferrua, Maxwell J. Drake, Dheekshita Kumar, Ariel S. Anders, Evelyn N. Wang, Daniel J. Preston, “Active fume hood sash height monitoring with audible feedback”, Energy Reports, vol. 4, pp. 645-652, 2018