NVIDIA TESLA V100 GPU COMPUTING FOR DEEP LEARNING AND AI

Artificial Intelligence (AI) and Deep Learning (DL) have become hot topics that attract wide attention and excitement. Data analysts, data scientists, researchers and engineers are working relentlessly to make their AI and DL training faster and more accurate with every tool available. Nvidia’s GPUs, especially the latest Nvidia Tesla® V100 Tensor Core GPU, are among the most powerful computing resources available to AI and DL experts in 2018.

NUS users will be able to run their AI/DL training on GPU systems powered by the latest Nvidia GPUs, which can be easily accessed from within the campus. Please read on to find out more.

What are the GPU Systems to Be Introduced?

Five GPU servers will be introduced to support AI/DL training and research computation in NUS. Each GPU server is configured with:

• Four Nvidia Tesla® V100 GPU cards, powered by Nvidia Volta architecture with 640 Tensor cores, 5,120 CUDA cores and 32GB GPU memory each;

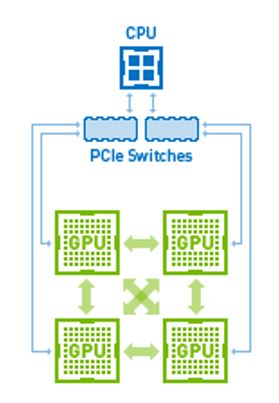

• NVLink interconnect for GPU cards, giving an interconnect bandwidth of 300GB/sec;

• DDR4 system memory of 384GB; and

• NVMe™ SSD storage with a maximum of 6TB usable space.

In total, the upcoming five GPU servers will provide 20 GPUs for running AI/DL training. On any one GPU server, all four Tesla® V100 GPUs and their combined 128GB of GPU memory can be used for a single complex training job.
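The totals above follow directly from the per-server configuration. As a minimal sketch, the arithmetic can be written out as below; the function name `capacity` and the dictionary keys are illustrative choices, not part of any NUS tool.

```python
# Per-server and per-GPU figures taken from the configuration list above.
SERVERS = 5
GPUS_PER_SERVER = 4
GPU_MEMORY_GB = 32
TENSOR_CORES_PER_GPU = 640
CUDA_CORES_PER_GPU = 5120


def capacity(servers: int = SERVERS) -> dict:
    """Return the aggregate GPU resources for the given number of servers."""
    gpus = servers * GPUS_PER_SERVER
    return {
        "gpus": gpus,
        "gpu_memory_gb": gpus * GPU_MEMORY_GB,
        "tensor_cores": gpus * TENSOR_CORES_PER_GPU,
        "cuda_cores": gpus * CUDA_CORES_PER_GPU,
    }


if __name__ == "__main__":
    print("one server: ", capacity(1))
    print("all servers:", capacity())
```

For a single server this gives 4 GPUs and 128GB of GPU memory, and for all five servers 20 GPUs, matching the figures quoted in the text.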

Why Is the GPU System “Good”?

NVIDIA® Tesla® V100 Tensor Core is the most advanced data center GPU built to accelerate AI and deep learning. Its computing performance is critical for data scientists and researchers working on challenges such as recognising speech for virtual personal assistants and teaching autonomous cars to drive. These are complex AI challenges that require deep learning models which are growing exponentially in complexity. In addition, the V100 delivers better performance for traditional High Performance Computing (HPC) applications and graphics visualisation. According to NVIDIA, it offers the performance of up to 100 CPUs in a single GPU.

Technical specifications and performance figures of the Tesla® V100 GPU using NVLink interconnect are listed in the table below.

Table 1 Technical specifications of Tesla® V100 GPU

| Specification | Value |
|---|---|
| GPU Architecture | NVIDIA Volta |
| NVIDIA Tensor Cores | 640 |
| NVIDIA CUDA Cores | 5,120 |
| GPU Memory | 32GB |
| Memory Bandwidth | 900 GB/sec |
| Interconnect Bandwidth | 300 GB/sec |
| Single-Precision Performance | 15.7 TFLOPS |
| Double-Precision Performance | 7.8 TFLOPS |
| Tensor Performance | 125 TFLOPS |
| Compute APIs | CUDA, DirectCompute, OpenCL, OpenACC |

An in-house benchmark of a typical deep learning training workload (ResNet-20) recently compared a CPU server with dual CPUs (24 cores in total) against a single Tesla V100 GPU. The Tesla V100 completed the training about 9 to 12 times faster. Another benchmark, for a deep learning inference case (ResNet-50), showed the Tesla V100 to be 47 times faster than one Xeon CPU (14 cores).

In both comparisons, the Tesla V100 GPU cut computing time significantly, for deep learning training and for inference alike. This performance lets deep learning users train their models faster, leaving more time to refine them for better accuracy.
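To put the training speedup in practical terms, the sketch below converts a CPU run time into an estimated GPU run time using the 9 to 12 times range from the ResNet-20 benchmark. The 36-hour job is a made-up example, and `gpu_time_range` is a hypothetical helper; actual speedups depend on the model, data pipeline and software stack.

```python
def gpu_time_range(cpu_hours: float,
                   min_speedup: float = 9.0,
                   max_speedup: float = 12.0) -> tuple:
    """Estimate (fastest, slowest) GPU run time in hours for a CPU run time.

    The default speedups are the 9x-12x range reported for the in-house
    ResNet-20 training benchmark; other workloads may behave differently.
    """
    if cpu_hours < 0:
        raise ValueError("cpu_hours must be non-negative")
    if min_speedup <= 0 or max_speedup < min_speedup:
        raise ValueError("speedups must satisfy 0 < min_speedup <= max_speedup")
    return cpu_hours / max_speedup, cpu_hours / min_speedup


if __name__ == "__main__":
    # Hypothetical job: 36 hours on the dual-CPU server.
    fastest, slowest = gpu_time_range(36)
    print(f"Estimated GPU time: {fastest:.1f} to {slowest:.1f} hours")
```

Under these assumptions, a training job that takes a day and a half on the CPU server would finish within a few hours on one Tesla V100.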

When Will the GPU Systems Be Available?

The installation of the GPU systems is in progress. The estimated timeline is stated below:

• The pilot phase, by the end of December 2018

• The actual production phase, by the end of January 2019

How to Access and Use the GPU Systems?

The GPU systems will be accessible to NUS users through the campus network. If your NUS account is not yet enabled on the central HPC system, please submit your application here.

AI/DL training can be carried out on the GPU systems either interactively or as automated batch jobs. The former is mainly for testing, debugging or supervised training runs, while the latter is mainly for large-scale and complex training.

Popular deep learning and AI frameworks will be enabled for users, including, but not limited to, TensorFlow, Caffe2, Keras and PyTorch. More detailed instructions will be provided when the system is ready.
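Until those instructions are published, a framework-independent way to confirm which GPUs a session can see is to query `nvidia-smi`, the command-line utility that ships with the NVIDIA driver. The sketch below is only an illustration: it assumes `nvidia-smi` is on the `PATH` of the GPU servers, and the helper names `parse_gpu_lines` and `list_gpus` are hypothetical. On a machine without the utility it simply reports no GPUs.

```python
import shutil
import subprocess

# Query GPU name and total memory as CSV without a header row.
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"]


def parse_gpu_lines(text: str) -> list:
    """Turn 'name, memory' CSV lines into a list of (name, memory) tuples."""
    gpus = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, _, memory = line.partition(",")
        gpus.append((name.strip(), memory.strip()))
    return gpus


def list_gpus() -> list:
    """Return the GPUs reported by nvidia-smi, or [] if it is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    except OSError:
        return []
    if result.returncode != 0:
        return []
    return parse_gpu_lines(result.stdout)


if __name__ == "__main__":
    gpus = list_gpus()
    if not gpus:
        print("No NVIDIA GPU visible from this session.")
    for index, (name, memory) in enumerate(gpus):
        print(f"GPU {index}: {name} ({memory})")
```

On one of the new servers, a check like this would be expected to list four devices, one per Tesla V100 card; the exact device names and memory figures printed depend on the driver.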

Who Can Access and Use?

Academic and research visitors can apply to use the systems so long as they have project collaborations with an NUS professor.

The upcoming Tesla V100 GPU systems will be available for all NUS staff and students to access and use for AI/DL related training.