HOW FAST CAN AMBER AND GROMACS JOBS RUN WITH THE P100 GPU ACCELERATOR?

The P100, the latest accelerator in the NVIDIA Tesla GPU series, is built on the NVIDIA Pascal GPU architecture and delivers a unified platform for accelerating both High Performance Computing (HPC) and Artificial Intelligence (AI). A set on loan from HPE has been installed in the NUS IT Data Centre, and tests have been carried out to explore its capability in AI and to benchmark its performance in molecular simulations.

This article presents the benchmark results of running two popular molecular simulation packages, Amber and Gromacs, on the server with four P100 GPUs. The results are also compared with those obtained on the existing CPU and GPU servers at NUS IT and NSCC.

Hardware and Software

| The GPU Servers | |
|---|---|
| P100 GPU Server | 4 x P100 GPUs, 2 x E5-2680 v4 CPUs @ 2.40GHz and 264GB RAM |
| NSCC GPU Servers | 1 x Tesla K40t, 2 x E5-2690 v3 CPUs @ 2.60GHz and 164GB RAM |
| NUS IT GPU Server | 2 x Tesla M2090, 2 x X5650 CPUs @ 2.67GHz and 49GB RAM |

| The CPU Servers | |
|---|---|
| Atlas8 cluster | 2 x E5-2650 v4 @ 2.20GHz and 198GB RAM |
| Atlas7 cluster | 2 x X5650 @ 2.67GHz and 49GB RAM |
| Atlas6 cluster | 2 x X5650 @ 2.67GHz and 49GB RAM |
| Atlas5 cluster | 2 x X5550 @ 2.67GHz and 49GB RAM |

| Benchmarking Software | |
|---|---|
| Amber16 | PMEMD implementation of SANDER, Release 16, CUDA 8.0 and GNU gcc 4.8.2 |
| Amber14 | PMEMD implementation of SANDER, Release 14, CUDA 6.5 and GNU gcc 4.8.2 |
| Gromacs 2016 | CUDA 8.0 and GNU gcc 4.8.5 |
| Gromacs 5.1.2 | CUDA 6.5 and GNU gcc 4.8.2 |

Benchmark Results

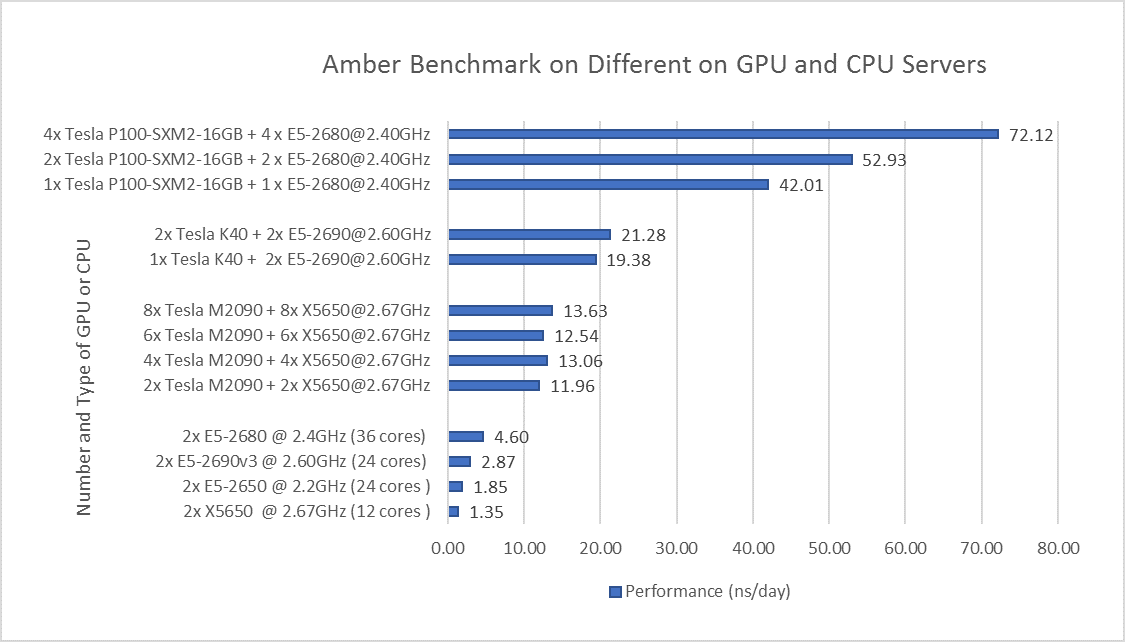

Amber Software Benchmark Results

The Amber benchmark results on the different GPU and CPU servers are presented in the following graph:

Some facts on running the Amber benchmark jobs:

- Jobs on the P100 GPU server run within one node, while jobs on the K40 and M2090 GPU servers run within one node or across multiple nodes using the Message Passing Interface (MPI).

- All jobs on the CPU servers run within one node, using all available processor cores.

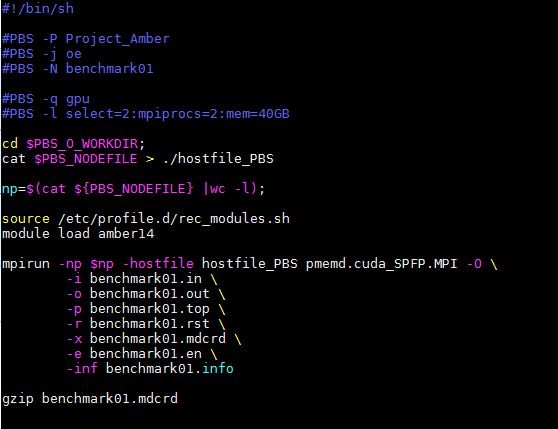

- The following is a sample script for submitting jobs to the NUS IT GPU servers:

- “select=2” defines the number of nodes to which the job is dispatched. On each node, the number of MPI processes equals the “mpiprocs” value defined in the script. If a node has more than one GPU, the MPI processes are distributed equally across the GPUs. In the above example, with “select=2”, “mpiprocs=2” and two GPUs on each node, one pmemd.cuda_SPFP.MPI process runs on each GPU.
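The distribution described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not how PBS or Amber assign devices internally: the function name `gpu_assignment` is hypothetical, and the round-robin placement of processes on GPUs is an assumption made to model "equally distributed".

```python
from collections import Counter


def gpu_assignment(select, mpiprocs, gpus_per_node):
    """Return a list of (node, gpu) pairs, one per MPI process.

    Assumption: the processes on a node are spread round-robin over
    that node's GPUs, which models an equal distribution.
    """
    if mpiprocs % gpus_per_node != 0:
        raise ValueError("mpiprocs must be a multiple of gpus_per_node "
                         "for an equal distribution")
    layout = []
    for node in range(select):
        for local_rank in range(mpiprocs):
            layout.append((node, local_rank % gpus_per_node))
    return layout


# Values from the sample script: select=2, mpiprocs=2, two GPUs per node.
layout = gpu_assignment(select=2, mpiprocs=2, gpus_per_node=2)
processes_per_gpu = Counter(layout)

for (node, gpu), count in sorted(processes_per_gpu.items()):
    print(f"node {node}, GPU {gpu}: {count} process(es)")
```

With the values from the sample script, every (node, GPU) pair receives exactly one process, four processes in total.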

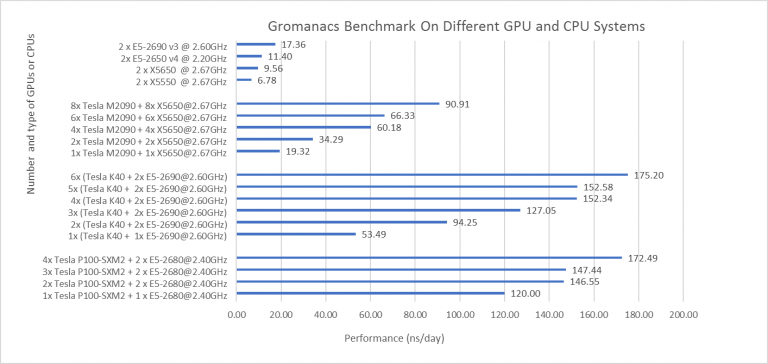

Gromacs Software Benchmark Results

The Gromacs benchmark results on the different GPU and CPU servers are presented in the following graph:

Some facts about running the Gromacs benchmark jobs:

- Jobs on the P100 GPU server run within one node, while jobs on the K40 and M2090 GPU servers run within one node or across multiple nodes using MPI.

- All jobs on the CPU servers run within one node, using all available CPU cores.

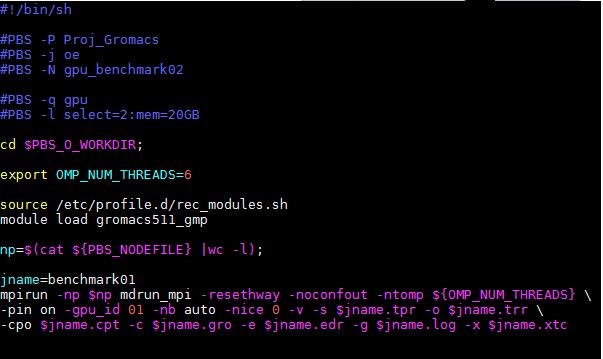

- The following is a sample script for submitting jobs to NUS IT GPU servers:

- “select=2” submits the job to two compute nodes, and a total of 2 MPI processes are started on GPUs 0 and 1 by specifying “-gpu_id 01”. Since “OMP_NUM_THREADS=6”, each MPI process spawns 6 OpenMP threads.
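As a rough check of these settings, the per-node layout can be worked out in Python. The sketch assumes the Gromacs convention that each digit of the “-gpu_id” string assigns one MPI process on a node to the GPU with that id, and that each X5650 CPU has six cores; the function name `gromacs_node_layout` is hypothetical.

```python
def gromacs_node_layout(gpu_id, omp_num_threads):
    """Derive the per-node layout implied by -gpu_id and OMP_NUM_THREADS.

    Assumption: each digit of gpu_id maps one MPI process on the node
    to the GPU with that id.
    """
    gpu_of_rank = [int(digit) for digit in gpu_id]
    ranks_per_node = len(gpu_of_rank)
    return {
        "ranks_per_node": ranks_per_node,
        "gpu_of_rank": gpu_of_rank,
        "threads_per_node": ranks_per_node * omp_num_threads,
    }


# Values from the sample script: -gpu_id 01 and OMP_NUM_THREADS=6.
layout = gromacs_node_layout("01", 6)

# Assumed core count of one NUS IT GPU node: 2 x X5650 with 6 cores each.
cores_per_node = 2 * 6

print(layout)
print("threads match cores:", layout["threads_per_node"] == cores_per_node)
```

Under these assumptions, two MPI processes with six OpenMP threads each occupy all twelve cores of a node without oversubscribing them.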

What we have learnt:

- With only one GPU in use, Amber and Gromacs jobs can run more than 10 times faster on the P100 GPU server than on a CPU-only node.

- On the P100 GPU server, performance does not scale linearly as the number of GPUs increases from 1 to 4. For Amber jobs, performance improves when more GPUs are used, but for Gromacs jobs, there is no substantial gain even when the jobs run with 2, 3, or 4 GPUs.

- On the NUS IT GPU servers (Gold Cluster), with two M2090 GPUs on each node, Gromacs job performance scales quite well but Amber job performance does not scale. Users are advised to run their own benchmark tests to determine the optimal number of nodes/GPUs required.

- The combination of “one MPI process with one GPU” always gives the optimal performance. This is true for both Amber and Gromacs. When submitting jobs using PBS, users tend to set “mpiprocs” to the number of cores available on one node. For example, the P100 GPU server has 28 cores in total, so “mpiprocs” would normally be specified as 28. However, when an Amber job runs with 4 GPUs and mpiprocs=28, the performance is only 8.93 ns/day, whereas with “mpiprocs” set to 4 it reaches 72.12 ns/day!
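The size of the “mpiprocs” effect can be quantified with a small Python sketch. The two ns/day figures are the ones quoted above; the helper names (`ranks_per_gpu`, `speedup`, `parallel_efficiency`) are hypothetical, and the efficiency function is included only as a template for users' own benchmark numbers.

```python
def ranks_per_gpu(mpiprocs, gpus):
    """Average number of MPI processes sharing one GPU."""
    return mpiprocs / gpus


def speedup(perf_new, perf_old):
    """Ratio of two throughputs measured in ns/day."""
    return perf_new / perf_old


def parallel_efficiency(perf_one_gpu, perf_n_gpus, n_gpus):
    """Fraction of ideal linear scaling achieved on n_gpus (1.0 = linear)."""
    return perf_n_gpus / (n_gpus * perf_one_gpu)


# Amber on the P100 server with 4 GPUs (figures from the text above).
perf_mpiprocs_28 = 8.93   # ns/day with mpiprocs=28
perf_mpiprocs_4 = 72.12   # ns/day with mpiprocs=4

print(ranks_per_gpu(28, 4))  # 7.0 processes share each GPU
print(ranks_per_gpu(4, 4))   # 1.0 process per GPU
print(round(speedup(perf_mpiprocs_4, perf_mpiprocs_28), 2))  # 8.08
```

Matching one MPI process to each GPU is therefore about 8 times faster than placing seven processes on each GPU. To check how a job scales, `parallel_efficiency` can be applied to ns/day values measured with 1 GPU and with N GPUs.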