MY EXPERIENCE WITH HPC PAY-PER-USE SERVICE

With simulation codes now running in parallel on more and more grid cells, there is a constant need for ever-increasing computing power. Simulation codes running on a few hundred CPUs are now commonplace. Some computational fluid dynamics (CFD) codes running 3D direct numerical simulations (DNS) can require thousands of CPUs.

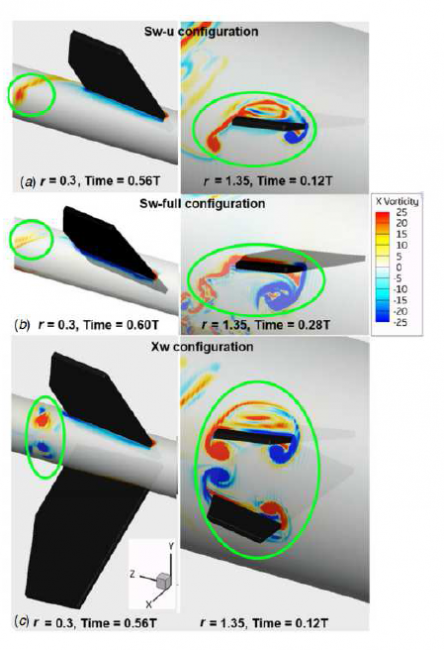

At NUS HPC (High Performance Computing), the maximum number of CPUs allowed for a job on atlas7 is 48. This is sufficient for most users, who run 2D or small 3D jobs and require far fewer CPUs. For power users, however, it is not enough. At Temasek Laboratories, we frequently perform 3D CFD simulations requiring over a hundred CPUs. One example is our flapping wing simulation, shown in Figure 1, which compares the vortices generated by different wing configurations (Tay, van Oudheusden, & Bijl, 2014). For this simulation, we require around 80 to 100+ CPUs, depending on the resolution.

Where can we find the computational resources, given that NUS HPC limits a job to 48 CPUs? One solution is to purchase our own HPC cluster, which can cost a hefty $100,000 or more and also requires constant maintenance and support. If the cluster is utilized 24/7, this will be the most economical solution. If it is not heavily utilized, an alternative is the HPC pay-per-use service. Unlike normal usage, which is free, the pay-per-use service charges according to the number of CPUs and the duration of usage. For example, subscribing to 96 CPU cores through the service costs about $1,000+ for one month, while the one-time investment in an equivalent cluster would be close to $100,000. For our ad-hoc usage, the pay-per-use service is therefore the more cost-effective and viable solution.
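To make the comparison concrete, here is a rough break-even sketch in Python. It uses only the two rounded figures quoted above (about $1,000 per month for 96 CPU cores, close to $100,000 for an equivalent cluster). The function names are hypothetical, and the calculation deliberately ignores maintenance, support, power and hardware ageing, all of which would make owning a cluster more expensive than shown here.

```python
# Rough break-even estimate: pay-per-use rental vs. buying a cluster.
# Assumption: both figures are the rounded values quoted in the text,
# and running costs of an owned cluster (maintenance, power) are ignored.

CLUSTER_COST = 100_000   # one-time purchase, approximate
MONTHLY_FEE = 1_000      # 96 CPU cores for one month, approximate


def breakeven_months(cluster_cost, monthly_fee):
    """Months of rental whose total fee equals the purchase price."""
    if monthly_fee <= 0:
        raise ValueError("monthly_fee must be positive")
    return cluster_cost / monthly_fee


def cheaper_option(cluster_cost, monthly_fee, months_used):
    """Return which option costs less for a given number of rented months."""
    rental_total = monthly_fee * months_used
    return "pay-per-use" if rental_total < cluster_cost else "own cluster"


if __name__ == "__main__":
    months = breakeven_months(CLUSTER_COST, MONTHLY_FEE)
    print(f"Break-even after about {months:.0f} months of rental")
    print("6 months of use:", cheaper_option(CLUSTER_COST, MONTHLY_FEE, 6))
```

Under these assumptions, the rental fees only add up to the purchase price after roughly 100 months of full subscription, which is why occasional users are unlikely to recover the cost of their own cluster.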

There are a few things to note, though. Firstly, the nodes are not available to the end user immediately after reservation. Users must wait an hour while the system allocates the resources, and for large node reservations the wait can be longer, depending on the available resources. Hopefully, the waiting time can be shortened in the future. Secondly, some codes which run well on previous clusters such as atlas7 may not work on the pay-per-use clusters, so some troubleshooting may be required. Luckily, new users may be given some free time for code testing before the actual charging commences. Interested users can contact the HPC staff for more information.