EFFECTS OF NUMBER OF CPU CORES ON VOLTA CLUSTER DEEP LEARNING JOBS EXECUTION TIME

Introduction

With the introduction of the new V100-32GB Volta Cluster to NUS HPC users in mid-February, we have seen an increasing number of users interested in running their deep learning code on the latest GPUs in the cluster.

Most, if not all, deep learning workloads are image-based applications, for example image classification, generative adversarial networks and object detection. The datasets for these applications can be as simple as MNIST or CIFAR10, with images of less than 50 pixels per side, or as large as ImageNet or even larger for generative, segmentation and detection datasets (more than 500 pixels per side).

With the large memory capacity and faster processing cores of the V100-32GB, users may think that they can get better performance by increasing their batch sizes or loading more images at the same time. By monitoring GPU utilisation and through debugging sessions with users, we have found that this is not always true.

Bottlenecks and inefficiencies in the training pipeline become obvious when the GPU is not the limiting factor. We conducted a few experiments and examined the data loading code of Keras/TensorFlow to identify inefficiencies and methods to address them.

Bottlenecks in the Pipeline

Data from datasets residing on storage travels through different media and undergoes various processing steps before it is ready to be loaded into GPU memory.

Storage & Network

In the NUS HPC cluster, users usually store their datasets on an NFS NAS system (/hpctmp). The storage is mounted on all login nodes (e.g. Atlas9-c01) and compute nodes (Volta01 to Volta05) via 10 Gbit Ethernet.

Alternatively, users can copy their data to the local scratch directory in each Volta node. Each node has its own scratch directory that is only accessible within the node. The scratch directory comprises 5.6TB of NVMe storage. The SSD is connected via PCIe Gen3 x8, which has much more bandwidth than 10GbE. Being an NVMe drive, it also has much lower latency than the NFS NAS system.

    Filesystem      Size  Used Avail Use% Mounted on
    /dev/nvme0n1p3  5.6T  150G  5.5T   3% /scratch
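As a rough back-of-the-envelope comparison of the two data paths, the sketch below computes the theoretical peak bandwidth of each link. These are link-level maxima derived from the PCIe Gen3 and 10 Gigabit Ethernet signalling rates, not measurements taken on the Volta nodes; real throughput will be lower because of protocol overhead, the NFS server and the drive itself.

```python
# Theoretical peak bandwidth of the two links, in GB/s (1 GB = 1e9 bytes).
# These are link-level maxima, not measured throughput on the cluster.

def pcie_gen3_gbytes_per_s(lanes):
    # PCIe Gen3 runs at 8 GT/s per lane with 128b/130b encoding.
    bits_per_s = 8e9 * (128 / 130) * lanes
    return bits_per_s / 8 / 1e9

def ethernet_gbytes_per_s(gbit_per_s):
    # 8 bits per byte; Ethernet framing overhead is ignored here.
    return gbit_per_s / 8

nvme_link = pcie_gen3_gbytes_per_s(lanes=8)
nfs_link = ethernet_gbytes_per_s(10)

print(f"PCIe Gen3 x8: {nvme_link:.2f} GB/s")       # 7.88 GB/s
print(f"10GbE:        {nfs_link:.2f} GB/s")        # 1.25 GB/s
print(f"ratio:        {nvme_link / nfs_link:.1f}x")  # 6.3x
```

On paper the local scratch link is roughly six times wider than the network link, before the latency advantage is taken into account.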

CPU

For data residing as jpg/png files or requiring augmentation, the CPU plays a large part.

If you are using Keras or plain TensorFlow to load jpg/png images, the CPU does the image decoding and preprocessing (augmentation) by default. Imagine loading batches of 128 large images in every epoch: for each batch, the CPU first has to decode and transform all 128 images before feeding them to the GPU as a single batch. This process can take minutes, with high CPU utilisation and 0% GPU utilisation. The entire training process is delayed when each batch requires a few minutes of CPU preprocessing followed by only a few seconds of actual GPU processing.
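The effect on GPU utilisation can be estimated with a simple model. The sketch below is only an illustration: the function name and the timings (120 s of CPU work and 5 s of GPU work per batch) are hypothetical values chosen to match the "few minutes versus few seconds" scenario described above, not measurements.

```python
def gpu_utilisation(cpu_seconds, gpu_seconds, overlapped=False):
    """Fraction of each training step during which the GPU is busy.

    overlapped=False: the CPU prepares a batch, then the GPU processes it.
    overlapped=True:  the next batch is prepared while the GPU is working,
                      so a step takes as long as the slower of the two stages.
    """
    if overlapped:
        step_seconds = max(cpu_seconds, gpu_seconds)
    else:
        step_seconds = cpu_seconds + gpu_seconds
    return gpu_seconds / step_seconds

# Hypothetical timings: 120 s of CPU preprocessing and 5 s of GPU work per batch.
print(f"{gpu_utilisation(120, 5):.1%}")                  # 4.0%

# If preprocessing were cut to 5 s and overlapped with GPU work:
print(f"{gpu_utilisation(5, 5, overlapped=True):.1%}")   # 100.0%
```

Under these assumed numbers the GPU sits idle for 96% of every step, which is why a faster GPU alone does not shorten the training time.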

There are, of course, ways to speed this up.

- Convert dataset to TFRecords (Keras/TensorFlow) or other binary formats (e.g. LMDB)

The conversion is done only once before training, and the converted file(s) can be reused for future training sessions. The conversion creates a few big files (TFRecords/LMDB) instead of many small jpg/png files. Each jpg/png file is decoded before being saved into TFRecords/LMDB, which removes the decoding step when reading each image at training time.

Storing your data in a binary file format like TFRecords takes up less space on disk, takes less time to copy, and can be read more efficiently from disk than many image files spread across directories.
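To illustrate why one large file is cheaper to read than many small ones, here is a minimal sketch of a length-prefixed record file using only the Python standard library. It is not the TFRecord or LMDB format (TFRecord, for instance, also stores a checksum with each record and is normally written with tf.io.TFRecordWriter); the function names pack_records and read_records and the sample payloads are hypothetical.

```python
import os
import struct
import tempfile

def pack_records(payloads, out_path):
    """Write each bytes payload as an 8-byte little-endian length, then the data."""
    with open(out_path, "wb") as f:
        for data in payloads:
            f.write(struct.pack("<Q", len(data)))
            f.write(data)

def read_records(path):
    """Yield the payloads back in order with one sequential pass over the file."""
    with open(path, "rb") as f:
        while True:
            header = f.read(8)
            if not header:
                return  # clean end of file
            if len(header) < 8:
                raise ValueError("truncated length header")
            (length,) = struct.unpack("<Q", header)
            data = f.read(length)
            if len(data) != length:
                raise ValueError("truncated record")
            yield data

# Stand-ins for decoded images: short byte strings instead of pixel arrays.
samples = [b"image-0", b"image-1-longer", b""]
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "dataset.records")
    pack_records(samples, path)
    restored = list(read_records(path))

print(restored == samples)  # True
```

Reading the dataset becomes a single open call followed by sequential reads, rather than one open, read and close per image, which matters most on a network filesystem.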

- Preprocess the next few batches of data while the current batch is being processed on the GPU

In Keras, users typically use a data generator such as ImageDataGenerator to read from a directory and fit_generator to start the training. The fit_generator method has 3 parameters you can adjust to try to speed up data loading:

• max_queue_size: Integer. Maximum size for the generator queue. If unspecified, max_queue_size will default to 10. Specifies the number of batches to prepare in the queue in advance.

• workers: Integer. Maximum number of processes to spin up when using process-based threading. If unspecified, workers will default to 1. If 0, will execute the generator on the main thread.

• use_multiprocessing: Boolean. If True, use process-based threading. If unspecified, use_multiprocessing will default to False. Note that because this implementation relies on multiprocessing, you should not pass non-picklable arguments to the generator as they can’t be passed easily to children processes.

Read more here https://keras.io/models/model/#fit_generator

Unfortunately, the built-in ImageDataGenerator is not thread safe, so using multiple workers might cause repeats in the loaded data, and setting use_multiprocessing=True will throw an error. Read more on implementing a thread-safe DataGenerator here: https://stanford.edu/~shervine/blog/keras-how-to-generate-data-on-the-fly
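For thread-based workers, one common way to make a generator safe to share is to guard it with a lock. The following is a minimal sketch using only the standard library, not the approach from the linked post or anything built into Keras; ThreadSafeIterator and batch_starts are hypothetical names, and the generator here only yields batch start indices as a stand-in for real image batches.

```python
import threading

class ThreadSafeIterator:
    """Wrap an iterator so that only one thread at a time can advance it."""

    def __init__(self, iterable):
        self._iterator = iter(iterable)
        self._lock = threading.Lock()

    def __iter__(self):
        return self

    def __next__(self):
        with self._lock:
            return next(self._iterator)

def batch_starts(n_samples, batch_size):
    """Hypothetical stand-in for a data generator: yields each batch's start index."""
    for start in range(0, n_samples, batch_size):
        yield start

shared = ThreadSafeIterator(batch_starts(n_samples=1000, batch_size=10))
seen = []
seen_lock = threading.Lock()

def worker():
    # Each worker pulls batches from the same shared iterator until it is exhausted.
    for start in shared:
        with seen_lock:
            seen.append(start)

threads = [threading.Thread(target=worker) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Every batch was handed out exactly once across the four workers.
print(sorted(seen) == list(range(0, 1000, 10)))  # True
```

A lock only helps when the workers are threads. With use_multiprocessing=True each process works on its own copy of the generator, so an index-based class such as keras.utils.Sequence is the usual choice there.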

Using these two options, in addition to requesting an adequate number of CPU cores, will speed up the data loading process.

Increasing the maximum queue size increases the number of batches prepared in advance for the GPU. Without workers or multiprocessing, however, this data preparation is single-threaded and inefficient.
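How a bounded queue and several workers fit together can be sketched with the standard library. This is an illustration of the idea only, not Keras's implementation: the parameter names max_queue_size and workers merely mirror those of fit_generator, prefetch and make_batch are hypothetical names, and unlike Keras this version does not preserve batch order.

```python
import queue
import threading

def prefetch(make_batch, n_batches, max_queue_size=10, workers=1):
    """Yield batches built by background threads (order is not preserved).

    make_batch(i) builds batch number i. At most max_queue_size finished
    batches wait in memory; workers block once the queue is full.
    """
    todo = queue.Queue()
    for i in range(n_batches):
        todo.put(i)
    ready = queue.Queue(maxsize=max_queue_size)

    def worker():
        while True:
            try:
                i = todo.get_nowait()
            except queue.Empty:
                return  # no batches left to prepare
            ready.put(make_batch(i))  # blocks while the queue is full

    for _ in range(workers):
        threading.Thread(target=worker, daemon=True).start()

    # Sketch only: an exception inside make_batch would leave this loop waiting.
    for _ in range(n_batches):
        yield ready.get()

def make_batch(i):
    # Stand-in for decoding and augmenting one batch of images.
    return [i] * 4

batches = list(prefetch(make_batch, n_batches=20, max_queue_size=4, workers=3))
print(len(batches))  # 20
```

With workers=1 the queue still lets preparation overlap with consumption, but only one batch is being built at a time; additional workers help only if enough CPU cores have been requested for them to run on.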

Experiments

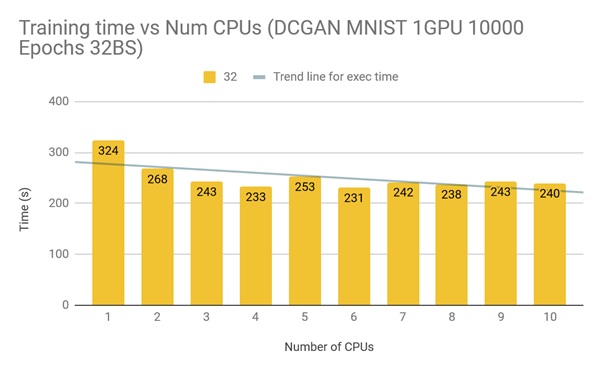

A DCGAN was trained for 10000 epochs on the MNIST dataset, stored in NumPy binary format and loaded entirely into main memory, with a batch size of 32.

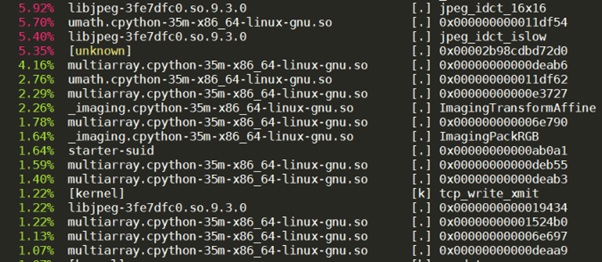

Encoding and Decoding of Images

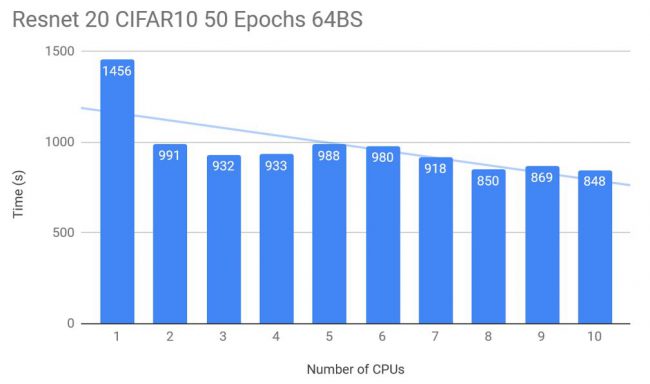

A ResNet20 classifier was trained on CIFAR10, stored in NumPy binary format and loaded entirely into main memory, for 50 epochs with a batch size of 64.

The above two experiments show that, even on simple datasets, increasing the number of CPU cores requested improves the training time of the neural network regardless of its architecture.
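When choosing a worker count for data loading, it helps to check how many cores the job can actually use. The sketch below is one possible way to do that in Python; whether the scheduler on the Volta nodes restricts a job to its requested cores is an assumption, and the "leave one core for the main process" rule is only a hypothetical starting point.

```python
import os

def available_cpu_cores():
    """Number of CPU cores this process may run on."""
    if hasattr(os, "sched_getaffinity"):
        # Linux: reflects CPU affinity restrictions, if the scheduler applies them.
        return len(os.sched_getaffinity(0))
    # Fallback: total cores on the machine, which may exceed what the job was given.
    return os.cpu_count() or 1

cores = available_cpu_cores()
# Hypothetical rule of thumb: keep one core for the main training process.
workers = max(1, cores - 1)
print(f"{cores} cores available, using {workers} workers")
```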

Conclusion

If you are using Keras/TensorFlow, we recommend implementing your own thread-safe DataGenerator class so that you can enable multiple workers or multiprocessing, which speeds up data preprocessing and reduces the bottleneck in feeding the GPU. Alternatively, prepare your dataset in TFRecords (TensorFlow/Keras) or LMDB (PyTorch/Caffe) format before training to skip the image decoding step entirely and optimise data loading.