ACHIEVING HIGH PERFORMANCE THROUGH BENCHMARKING AND COMPILATION OPTIMIZATION

Introduction

Over the years, many open-source software packages for scientific research have been developed and widely accepted by the research community. Because these packages are powerful and free of charge, they have become increasingly popular. End users only need to download, compile and install the software on their computing system to use it. Getting the software to compile and run is normally the first step, but optimizing the compilation to achieve high performance is more important and more challenging.

Gromacs is one such open-source package, widely used in life science research. The number of Gromacs users in NUS has been increasing over the last few years. We recently benchmarked Gromacs using a model created by Dr. Krishna Mohan Gupta in Assoc Prof. Jiang Jianwen’s group at the Department of Chemical and Biomolecular Engineering. We learnt that computing performance can be improved significantly not only by compiling with the Intel compiler, but also by running on more cores in parallel. We also benchmarked the single-precision solver against the double-precision solver and found that the single-precision solver runs about 60-90% faster.

Benchmark Tests and Results

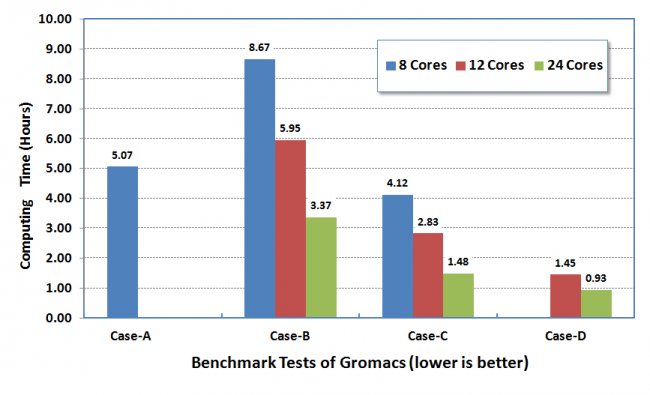

The benchmark tests were carried out using one of Dr. Gupta’s actual research models on several HPC Linux clusters, including a Linux cluster built in-house in Prof Jiang’s lab. The job took less than 5 hours to finish when 12 threads (12 cores on one compute node) or 24 threads (24 cores on two compute nodes) were specified for parallel execution. To make a fair comparison, jobs with 8 threads (8 cores) were also run on the HPC Linux clusters, because each compute node in Prof Jiang’s cluster has 8 processor cores.

The table below shows the four sets of tests (A to D) with the corresponding application module, Gromacs version, compiler, precision type and execution cluster.

| Notation | Module | Version | Compiled with | Precision | Cluster |
|---|---|---|---|---|---|
| A | Gromacs453_che | 4.5.3 | Intel Compiler | Double-precision (dp) | In-house |
| B | Gromacs465_gmp | 4.6.5 | GNU Compiler | Double-precision (dp) | HPC |
| C | Gromacs504_im | 5.0.4 | Intel Compiler | Double-precision (dp) | HPC |
| D | Gromacs504_im | 5.0.4 | Intel Compiler | Single-precision (sp) | HPC |

The computing times required for the model are plotted in the figure above for comparison.

Taking Case-A (Gromacs453_che with double-precision solver built on the in-house cluster with Intel compiler, running with 8 cores only) as the base case for comparison, the following findings are observed from the results of the benchmark tests:

- Case-B (Gromacs465_gmp with double-precision solver on HPC cluster, compiled by GNU compiler) completes about 1.6X slower than the base case when running with 8 cores; in contrast, Case-C (Gromacs504_im with double-precision solver on HPC cluster, compiled by Intel compiler) completes 1.2X faster.

- Running with 12 cores, Case-B still completes 1.2X slower while Case-C completes almost 1.8X faster.

- Running with 24 cores, Case-B can complete 1.5X faster and Case-C can complete 3.4X faster.

- The performance improvement offered by the single-precision solver is demonstrated in Case-D. It runs 3.5X and 5.4X faster than the base case with 12 and 24 cores respectively; it also runs 1.6-1.9X faster than the equivalent double-precision solver (Case-C) in both test runs.
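
The ratios quoted in the last finding can be rechecked from the speedups listed above. The sketch below does this arithmetic with awk. The helper name `ratio` is illustrative only, and the "scaling efficiency" figure is an assumed definition (speedup gained divided by the factor of cores added), not a metric from the benchmark itself. Because the inputs are the rounded speedups reported here, the results are approximate.

```shell
#!/bin/bash
# Use a fixed locale so awk prints a decimal point, not a comma.
export LC_ALL=C

# ratio A B: print A/B rounded to two decimals (hypothetical helper).
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f", a / b }'
}

# Single precision (Case-D) versus double precision (Case-C),
# using the speedups over Case-A reported for 12 and 24 cores.
sp_vs_dp_12=$(ratio 3.5 1.8)
sp_vs_dp_24=$(ratio 5.4 3.4)

# Case-C going from 8 to 24 cores: 1.2X to 3.4X over the base case.
scaling_c=$(ratio 3.4 1.2)
# Assumed definition: speedup gained divided by the core-count factor (24/8).
efficiency_c=$(awk -v s="$scaling_c" 'BEGIN { printf "%.0f", 100 * s / (24 / 8) }')

echo "sp vs dp, 12 cores: ${sp_vs_dp_12}X"        # prints 1.94X
echo "sp vs dp, 24 cores: ${sp_vs_dp_24}X"        # prints 1.59X
echo "Case-C, 8 to 24 cores: ${scaling_c}X"       # prints 2.83X
echo "Case-C scaling efficiency: ${efficiency_c}%" # prints 94%
```

The two precision ratios land at roughly 1.6X and 1.9X, in line with the range stated in the finding, and the Case-C figures suggest that tripling the core count recovers most of the ideal threefold gain for this model.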

The following conclusions can be drawn from the findings.

• A Gromacs solver built and optimized with the Intel compiler executes faster and shortens the computing time,

• Gromacs scales well when run on a larger number of cores, and

• The single-precision solver runs about 60-90% faster than the equivalent double-precision solver.

Recommendation

We recommend that users submit and run their jobs with the module gromacs504_im, which is the latest version, Gromacs 5.0.4, compiled and optimized using the Intel compiler. A sample job submission script is given below. Comment out or delete the line for the double-precision solver if you prefer to run the single-precision solver, or the line for the single-precision solver if you prefer double precision.

    #!/bin/bash
    #BSUB -q parallel
    #BSUB -n 24
    #BSUB -o stdout_24c.o
    #BSUB -R "span[ptile=12]"

    source /etc/profile.d/modules.sh
    module load gromacs504_im

    # single-precision solver ("..." stands for your own mdrun arguments)
    mpirun -np ${LSB_DJOB_NUMPROC} -f ${LSB_DJOB_HOSTFILE} mdrun_mpi ...

    # double-precision solver
    mpirun -np ${LSB_DJOB_NUMPROC} -f ${LSB_DJOB_HOSTFILE} mdrun_mpi_d ...

We also recommend running your jobs with more cores, within the resource limit, to achieve better speedup and shorter computing time. In addition, you can run the single-precision solver to further improve performance if the simulation does not require the double-precision solver (the command with the suffix _d).
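
As an alternative to commenting lines in and out, the choice between the two solvers can be made with a single setting. The following is a minimal sketch, not part of the provided sample script: the `PRECISION` variable and the `select_mdrun` function are hypothetical names introduced here, while the binary names `mdrun_mpi` and `mdrun_mpi_d` are the ones used in the sample script.

```shell
#!/bin/bash
# select_mdrun PRECISION: print the solver binary for "sp" or "dp".
# Hypothetical helper; binary names are taken from the sample job script.
select_mdrun() {
    case "$1" in
        sp) echo "mdrun_mpi" ;;      # single-precision solver
        dp) echo "mdrun_mpi_d" ;;    # double-precision solver (suffix _d)
        *)  echo "unknown precision: $1 (use sp or dp)" >&2
            return 1 ;;
    esac
}

# PRECISION is an assumed variable name; it defaults to single precision.
PRECISION="${PRECISION:-sp}"
MDRUN=$(select_mdrun "$PRECISION") || exit 1
echo "Selected solver: ${MDRUN}"

# Inside the job script, the chosen binary would replace the fixed name:
# mpirun -np ${LSB_DJOB_NUMPROC} -f ${LSB_DJOB_HOSTFILE} ${MDRUN} ...
```

Defaulting to single precision follows the recommendation above; set `PRECISION=dp` only when the simulation needs the double-precision solver.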

Appendix

Below is the list of optimization flags used in the Gromacs software compilation.

| Module | CMake Flags |
|---|---|
| Gromacs465_gmp (GNU compiler) | -DGMX_SIMD=AVX_256 -DGMX_OPENMP=on -DGMX_GPU=off -DGMX_MPI=on -DGMX_THREAD_MPI=on -DGMX_MPI=ON -DCMAKE_C_COMPILER=mpicc -DCMAKE_CXX_COMPILER=mpicxx -DGMX_FFT_LIBRARY=mkl -DGMX_DOUBLE=ON |
| Gromacs504_im (Intel compiler) | -DGMX_SIMD=AVX_256 -DGMX_OPENMP=on -DGMX_GPU=off -DGMX_MPI=on -DGMX_THREAD_MPI=on -DGMX_MPI=ON -DCMAKE_C_COMPILER=mpicc -DCMAKE_CXX_COMPILER=mpicxx -DGMX_FFT_LIBRARY=mkl |
| Gromacs504_im (double-precision version with Intel compiler) | -DGMX_SIMD=AVX_256 -DGMX_OPENMP=on -DGMX_GPU=off -DGMX_MPI=on -DGMX_THREAD_MPI=on -DGMX_MPI=ON -DCMAKE_C_COMPILER=mpicc -DCMAKE_CXX_COMPILER=mpicxx -DGMX_FFT_LIBRARY=mkl -DGMX_DOUBLE=ON |
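
The flags above differ between the single- and double-precision builds only in `-DGMX_DOUBLE=ON`. The sketch below shows one way such a CMake command line could be assembled; it only prints the command and does not run a build. The function name `build_cmake_cmd` and the source directory `../gromacs-5.0.4` are assumptions for illustration, and the repeated `-DGMX_MPI` entry from the table is listed once.

```shell
#!/bin/bash
# Flags common to the builds listed in the table (GMX_MPI listed once here).
COMMON_FLAGS="-DGMX_SIMD=AVX_256 -DGMX_OPENMP=on -DGMX_GPU=off \
-DGMX_MPI=on -DGMX_THREAD_MPI=on \
-DCMAKE_C_COMPILER=mpicc -DCMAKE_CXX_COMPILER=mpicxx \
-DGMX_FFT_LIBRARY=mkl"

# build_cmake_cmd PRECISION SOURCE_DIR: print the cmake command line.
# Hypothetical helper; "dp" adds the double-precision flag from the table.
build_cmake_cmd() {
    flags="$COMMON_FLAGS"
    if [ "$1" = "dp" ]; then
        flags="$flags -DGMX_DOUBLE=ON"
    fi
    echo "cmake $flags $2"
}

# Dry run only: the source path is an assumed example location.
build_cmake_cmd sp ../gromacs-5.0.4
build_cmake_cmd dp ../gromacs-5.0.4
```

Printing the command first makes it easy to compare the two configurations before starting a build in separate build directories.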