LARGE DATA TRANSFER WITHIN NUS CAMPUS

Introduction

Data transfer over the internet has become the norm: many people move data every day through mobile apps and cloud services such as Dropbox and iCloud. It is not surprising that some of you move anywhere from ten megabytes (MB) to a few hundred MB of data over the internet each day.

For research, however, large data transfer over the network remains a challenge, because large amounts of data must be moved within a short time to meet data analysis demands. Data sizes range from hundreds of gigabytes (GB) to a few terabytes (TB). In a recent case, a researcher requested a weekly transfer of 200 GB of data from the department’s storage system onto the central HPC systems for analysis. A fast and reliable data transfer method is crucial for such research requests.

Data transfer method

The method of data transfer varies with the local client on which the data is hosted.

If the data is hosted on a Windows-based computer, the secure file transfer protocol (sftp) is commonly used, through tools such as an SSH file transfer utility or FileZilla. This method is secure but incurs data encryption overhead on both the local client and the remote HPC system. Similarly, secure copy (scp) is commonly used to move data from a Linux-based client to the HPC system, and it also incurs overhead at both ends. As a result, the transfer speed of these common tools (sftp and scp) is hampered, and researchers find the performance unacceptable.

Is there a way to have a common storage space shared by both the department’s local computer and the central HPC system, so that moving data is transparent and straightforward? The Utility Storage service makes this possible: it provisions on-demand storage that can be mounted over the campus network on either a Windows-based or a Linux-based computer, and researchers can consider subscribing to it for large data transfers onto the central HPC systems. The idea is to mount the subscribed on-demand storage via the network file system (NFS) protocol over the campus network on both the local client and the central HPC system, so that data can be copied directly onto the on-demand storage. Because the NFS protocol operates at the system level and data moves directly from the client into the storage system, data transfer via NFS copy is faster and very reliable. The method also places no load on the HPC system during the transfer, so it imposes no overhead there.
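Once the on-demand storage is mounted on the local client, moving data onto it is an ordinary file copy. The following is a minimal Python sketch of that step, not the service's own tooling. The function name `copy_to_mounted_storage` is hypothetical, and the mount point is whatever directory the storage has been mounted at on the client.

```python
import shutil
import time
from pathlib import Path


def copy_to_mounted_storage(source: Path, mount_dir: Path) -> float:
    """Copy `source` into `mount_dir` and return the elapsed time in seconds.

    `mount_dir` is assumed to be the directory where the on-demand
    storage is already mounted (hypothetical; set by your own mount).
    """
    if not mount_dir.is_dir():
        raise FileNotFoundError(f"storage is not mounted at {mount_dir}")

    start = time.perf_counter()
    # copy2 performs a plain file copy and preserves timestamps;
    # it returns the path of the newly created file.
    destination = Path(shutil.copy2(source, mount_dir))
    elapsed = time.perf_counter() - start

    # Basic sanity check: the copied file should have the same size.
    if destination.stat().st_size != source.stat().st_size:
        raise OSError(f"size mismatch after copying {source.name}")
    return elapsed
```

A call might look like `copy_to_mounted_storage(Path("dataset.tar"), Path("/mnt/utility_storage"))`, where both paths are placeholders. Because the same volume is mounted on the HPC system, the copied file is then visible there without a second transfer.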

Benchmark of Data Transfer

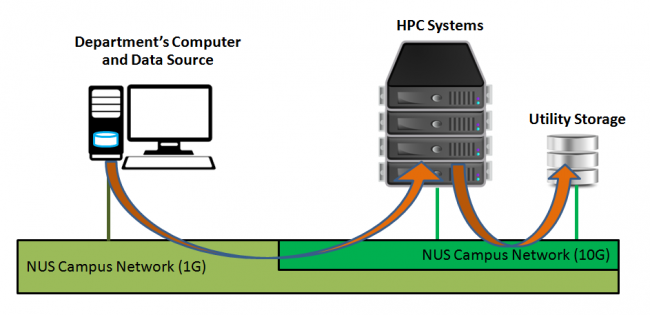

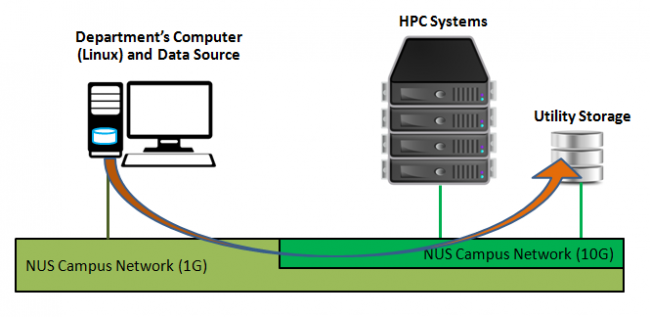

The following diagrams illustrate the difference of data transfer between Approach (A) using secure file transfer (sftp or scp) and Approach (B) using NFS copy.

A benchmark test was carried out using the two approaches to move three data sets of 1 GB, 10 GB and 50 GB from a local computer onto the provisioned on-demand storage. The on-demand storage volume is also available on the HPC system, so the data is ready for analysis. The data transfer results are tabulated below.

| Data size | Approach (A): sftp or scp, transfer time (seconds) | Approach (B): NFS copy, transfer time (seconds) |
| --- | --- | --- |
| 1 GB | 32 | 11 |
| 10 GB | 332 | 108 |
| 50 GB | 1650 | 531 |
| 200 GB (estimated) | 6600 (estimated) | 2124 (estimated) |

The results show that Approach (B) is consistently about three times as fast as Approach (A): the NFS copy takes roughly one third of the time at every data size tested. Based on the estimate, moving 200 GB of data onto the HPC system takes about 35 minutes using Approach (B), compared with nearly 2 hours (110 minutes) using Approach (A). The researcher reported that the performance of data transfer via NFS copy is acceptable.
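The arithmetic behind these figures can be checked with a short Python sketch. The timings are copied from the benchmark table. The 200 GB figures in the table equal four times the 50 GB timings, so the sketch assumes the estimate was a linear extrapolation from the 50 GB run. The function names are illustrative only.

```python
# Measured timings in seconds from the benchmark table:
# data size in GB -> (Approach A: sftp/scp, Approach B: NFS copy)
TIMINGS = {1: (32, 11), 10: (332, 108), 50: (1650, 531)}


def speedup(size_gb: int) -> float:
    """Ratio of Approach (A) time to Approach (B) time for one data size."""
    time_a, time_b = TIMINGS[size_gb]
    return time_a / time_b


def estimate_seconds(target_gb: float, measured_gb: int = 50) -> tuple[float, float]:
    """Linearly extrapolate both timings from a measured data size.

    Assumption: transfer time grows in proportion to data size.
    """
    time_a, time_b = TIMINGS[measured_gb]
    scale = target_gb / measured_gb
    return time_a * scale, time_b * scale


for size_gb in TIMINGS:
    print(f"{size_gb} GB: A/B time ratio = {speedup(size_gb):.2f}")

est_a, est_b = estimate_seconds(200)
print(f"200 GB estimate: A = {est_a / 60:.1f} min, B = {est_b / 60:.1f} min")
```

Under the linear assumption, `estimate_seconds(200)` returns `(6600.0, 2124.0)`, which matches the estimated row of the table. Real transfers of that size may deviate from the estimate depending on network and storage load.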

Summary

Large data transfer via the NFS copy approach, using on-demand utility storage provisioned over the campus network, gives researchers a faster and reliable solution to consider.